Meta's Llama 3 is a large language model pre-trained on more than 15 trillion tokens of data. It understands and generates human-like text well enough to power a wide range of applications, including the AI coding assistant built in this guide.

TL;DR

- Download and install Llama 3 from Meta's GitHub repository.

- Fine-tune the model on a code dataset to enhance its coding abilities.

- Develop a web application interface using Flask.

- Create a user-friendly frontend for your AI coding assistant.

What is Llama 3?

- Llama 3 is Meta's large language model, released with openly available weights and known for strong performance on natural language processing tasks.

- It understands and generates human-like text and performs well on tasks such as logical reasoning, code generation, and creative writing.

- Want to test out the power of Llama 3? Chat with it on Anakin AI, which supports virtually any AI model available!

What is RAG (Retrieval-Augmented Generation)?

- RAG, or Retrieval-Augmented Generation, is a technique that pairs a language model such as Llama 3 with external knowledge retrieval: relevant documents are fetched at query time and added to the prompt.

- By drawing on an external corpus of information, RAG lets Llama 3 give more informed and contextually relevant responses than it could from its training data alone.
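To make the retrieve-then-generate idea concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions: the three-document corpus, the word-overlap score, and the `retrieve` and `build_prompt` helpers are hypothetical stand-ins for a real vector index and prompt template, and no model is called.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus, k=1):
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        corpus,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, corpus, k=1):
    """Prepend the retrieved documents to the question as context."""
    context = "\n".join(retrieve(query, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical mini-corpus, used only for illustration.
CORPUS = [
    "Flask is a lightweight web framework for Python.",
    "CodeSearchNet is a dataset of functions paired with docstrings.",
    "A GPU accelerates the matrix multiplications used by neural networks.",
]

if __name__ == "__main__":
    print(build_prompt("What is the CodeSearchNet dataset?", CORPUS))
```

In a real system, the string returned by `build_prompt` would be passed to the language model, and the word-overlap ranking would typically be replaced by embedding similarity.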

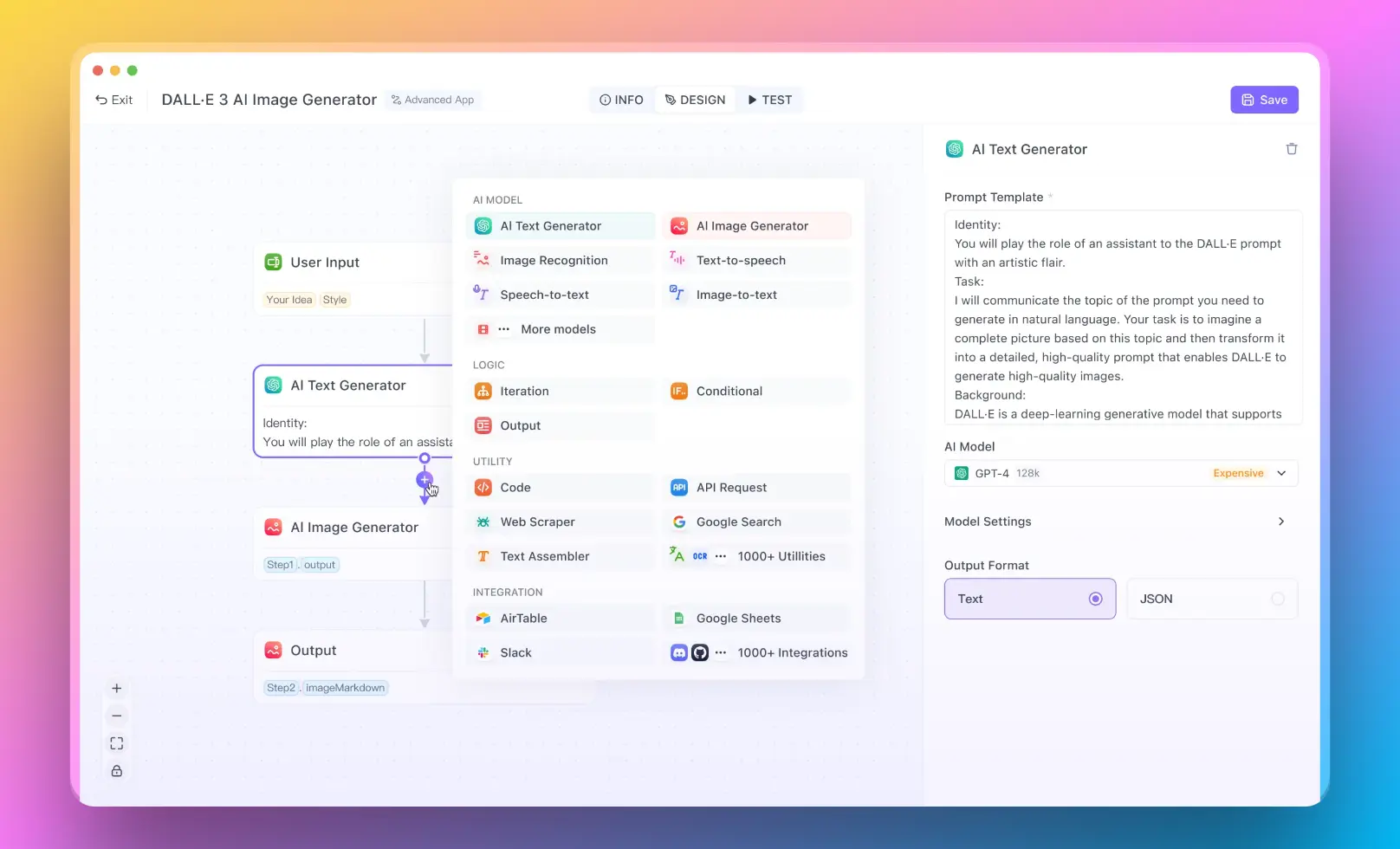

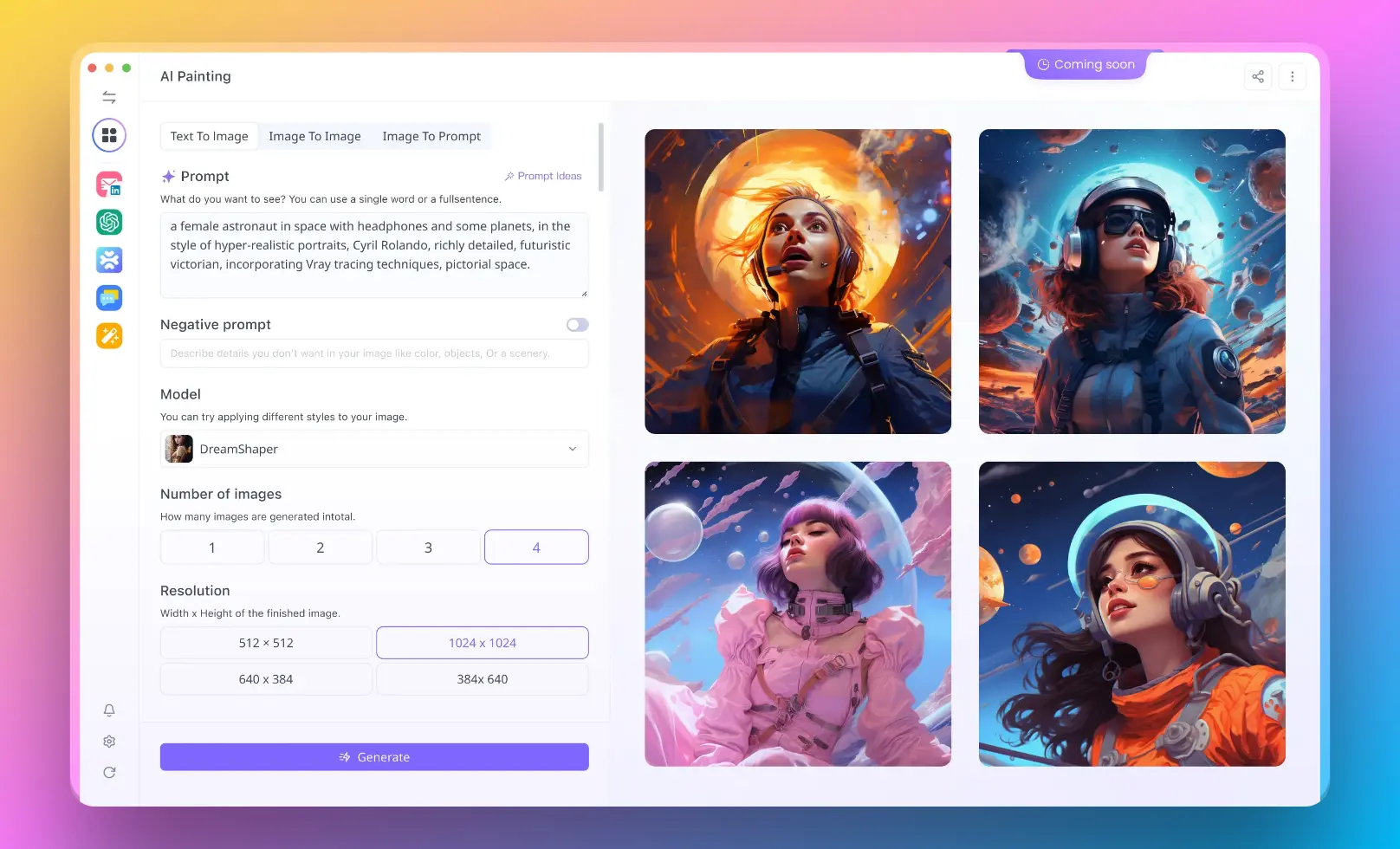

- If you want to run a RAG system without any coding experience, try Anakin AI, where you can create AI apps with a no-code builder.

Use Anakin AI for Building No Code RAG Apps

- Anakin AI offers a no-code platform for creating AI-powered applications, including Retrieval Augmented Generation (RAG) systems.

- With its visual interface, you can build custom RAG solutions by connecting data sources, configuring retrieval and generation components, and fine-tuning language models – all without writing code.

- Anakin AI provides pre-built templates and collaboration tools, making it accessible to non-technical users. While less flexible than code-based approaches, Anakin AI enables efficient RAG development for businesses lacking AI expertise.

With that background in place, let's get started!

Prerequisites

Before getting started, make sure you have the following:

- A computer with an NVIDIA GPU (8GB+ VRAM recommended)

- Linux or Windows 10/11 operating system

- Python 3.7+ installed

- Basic knowledge of Python and machine learning
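As a quick way to check the first items on this list, the sketch below reports the Python version and, if PyTorch happens to be installed, the first visible GPU and its memory. The helper names `python_ok` and `gpu_summary` are made up for this example, and the GPU check is an assumption about your setup rather than a requirement of Llama 3.

```python
import sys


def python_ok(minimum=(3, 7)):
    """True if the running interpreter is at least the given (major, minor) version."""
    return sys.version_info[:2] >= tuple(minimum)


def gpu_summary():
    """Describe the first CUDA GPU if PyTorch can see one, otherwise say why not."""
    try:
        import torch  # optional; only needed for the GPU check
    except ImportError:
        return "PyTorch is not installed, so the GPU could not be checked."
    if not torch.cuda.is_available():
        return "PyTorch is installed but no CUDA GPU is visible."
    props = torch.cuda.get_device_properties(0)
    return f"{props.name}: {props.total_memory / 1024**3:.1f} GiB VRAM"


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("GPU:", gpu_summary())
```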

Step 1: Install Llama 3

The first step is to install the Llama 3 language model on your local machine. The Llama 3 code is available on GitHub, and the weights can be downloaded once Meta approves your access request.

- Clone the Llama 3 repository:

```bash
git clone https://github.com/meta-llama/llama3.git
cd llama3
```

- Install the required Python packages:

```bash
pip install -r requirements.txt
```

- Download the pre-trained Llama 3 weights. You'll need to fill out the form on the Llama 3 website to get access. Place the downloaded .pth file in the llama3/models directory.
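Before moving on, it can help to confirm that the weights landed where you expect. The following is a small sketch, not part of Meta's tooling: `find_checkpoints` is a hypothetical helper, and the `models` path in the usage section is an assumption you should adjust to your own layout.

```python
from pathlib import Path


def find_checkpoints(models_dir):
    """Return the sorted .pth files found anywhere under models_dir."""
    root = Path(models_dir)
    if not root.is_dir():
        return []
    return sorted(root.rglob("*.pth"))


if __name__ == "__main__":
    # "models" is an assumed location; change it if your weights live elsewhere.
    found = find_checkpoints("models")
    if found:
        for path in found:
            print(f"{path} ({path.stat().st_size / 1024**3:.2f} GiB)")
    else:
        print("No .pth files found; check the download location.")
```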

Step 2: Fine-tune Llama 3 on Code

Next, we need to fine-tune Llama 3 on a dataset of code so it learns to understand and generate source code.

- Prepare a dataset of code snippets and docstrings. A good option is the CodeSearchNet dataset. Download and extract the Python subset:

```bash
wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/python.zip
unzip python.zip
```
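Fine-tuning needs text examples rather than raw archive files. As a rough sketch, the helper below turns CodeSearchNet-style JSON lines into prompt/completion pairs. It assumes each record carries `code` and `docstring` fields and that the shards are gzip-compressed JSON Lines files. The comment-style prompt format and the `to_training_pairs` and `load_pairs` names are illustrative choices, not something the dataset or Llama 3 prescribes.

```python
import gzip
import json


def to_training_pairs(lines, max_code_chars=2000):
    """Turn CodeSearchNet-style JSON lines into prompt/completion dicts."""
    pairs = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        docstring = record.get("docstring", "").strip()
        code = record.get("code", "").strip()
        # Skip records with no description or with very long functions.
        if not docstring or not code or len(code) > max_code_chars:
            continue
        # Use only the first docstring line as a one-line comment prompt.
        summary = docstring.splitlines()[0]
        pairs.append({"prompt": f"# {summary}\n", "completion": code})
    return pairs


def load_pairs(path):
    """Read one gzip-compressed JSON Lines shard and convert it."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        return to_training_pairs(handle)


if __name__ == "__main__":
    sample = [
        json.dumps({"docstring": "Add two numbers.",
                    "code": "def add(a, b):\n    return a + b"}),
        json.dumps({"docstring": "", "code": "def undocumented():\n    pass"}),
    ]
    for pair in to_training_pairs(sample):
        print(pair)
```

The second sample record is dropped because it has no docstring, which is the kind of filtering you would likely want before training.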

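- Use a finetune.py script to train Llama 3 on the code dataset. The Llama repository does not include one, so this command assumes a script of your own (or one adapted from a fine-tuning library) that accepts the flags shown:

```bash
python finetune.py \
    --base_model meta-llama/Meta-Llama-3-8B \
    --data_path python/train \
    --output_dir python_model \
    --num_train_epochs 3 \
    --batch_size 128
```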

This will fine-tune Llama 3 on the Python code dataset for 3 epochs and save the fine-tuned model to the python_model directory.
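To get a feel for what 3 epochs at a batch size of 128 means in practice, the sketch below works out the number of optimizer steps. It also shows the gradient-accumulation count, since a batch of 128 sequences rarely fits in GPU memory at once and training scripts commonly split it into smaller micro-batches. The dataset size of 400,000 and the micro-batch size of 4 are illustrative assumptions, not values from this guide, and `training_schedule` is a hypothetical helper.

```python
import math


def training_schedule(num_examples, epochs, batch_size, micro_batch_size):
    """Compute optimizer steps and gradient-accumulation steps for a run."""
    if batch_size % micro_batch_size != 0:
        raise ValueError("batch_size must be a multiple of micro_batch_size")
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return {
        "steps_per_epoch": steps_per_epoch,
        "total_steps": steps_per_epoch * epochs,
        "grad_accum_steps": batch_size // micro_batch_size,
    }


if __name__ == "__main__":
    # 400_000 examples and a micro-batch of 4 are illustrative values only.
    print(training_schedule(num_examples=400_000, epochs=3,
                            batch_size=128, micro_batch_size=4))
```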

Step 3: Develop the Coding Assistant Interface

Now that we have a code-savvy language model, let's build a user interface for our AI coding assistant. We'll create a simple web app using the Flask framework.

- Install Flask:

```bash
pip install flask
```

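- Create a new file called app.py with the following code:

```python
from flask import Flask, jsonify, request, send_from_directory
from transformers import AutoModelForCausalLM, AutoTokenizer

app = Flask(__name__)

# Load the fine-tuned model and tokenizer once, at startup.
model_path = "python_model"
tokenizer = AutoTokenizer.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(model_path)


@app.route('/')
def index():
    # Serve index.html (expected next to app.py) so the page can reach /complete.
    return send_from_directory(app.root_path, 'index.html')


@app.route('/complete', methods=['POST'])
def complete():
    data = request.get_json(silent=True) or {}
    code = data.get('code')
    if not code:
        return jsonify({'error': "missing 'code' field"}), 400
    input_ids = tokenizer.encode(code, return_tensors='pt')
    # max_new_tokens caps only the generated part; max_length would also count the prompt.
    output = model.generate(input_ids, max_new_tokens=100, num_return_sequences=1)
    generated_code = tokenizer.decode(output[0], skip_special_tokens=True)
    return jsonify({'generated_code': generated_code})


if __name__ == '__main__':
    app.run()
```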

This creates a Flask web server with a /complete endpoint. It loads the fine-tuned Llama 3 model and tokenizer, and uses them to generate code completions when a POST request is sent to /complete with the current code as JSON data.
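One detail worth knowing: for causal language models, `generate` returns the prompt tokens followed by the newly generated ones, so the decoded text repeats the code the user sent. If you want only the continuation, a post-processing step along these lines can help. This is a sketch under assumptions: `new_token_ids` and `truncate_at_stop` are hypothetical helpers, and the triple-newline stop sequence is just an example heuristic.

```python
def new_token_ids(output_ids, prompt_length):
    """Drop the first prompt_length token ids, keeping only generated ones."""
    return list(output_ids[prompt_length:])


def truncate_at_stop(text, stop_sequences=("\n\n\n",)):
    """Cut the text at the earliest stop sequence, if any occurs."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


if __name__ == "__main__":
    # Plain lists stand in for the token tensors used in the Flask app.
    print(new_token_ids([11, 12, 13, 21, 22], prompt_length=3))
    print(repr(truncate_at_stop("return a + b\n\n\nprint('unrelated')")))
```

Inside the Flask handler, the prompt length would be `input_ids.shape[1]`, and the sliced ids would be decoded in place of `output[0]`.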

- Run the Flask app:

```bash
flask run
```
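Once the server is up, you can exercise the endpoint without a browser. The sketch below uses only the Python standard library and assumes Flask's default development address, http://127.0.0.1:5000. The `build_completion_request` and `request_completion` names are made up for this example.

```python
import json
import urllib.request


def build_completion_request(code, base_url="http://127.0.0.1:5000"):
    """Build (but do not send) a JSON POST request for the /complete endpoint."""
    payload = json.dumps({"code": code}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/complete",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def request_completion(code, base_url="http://127.0.0.1:5000", timeout=120):
    """Send the request and return the 'generated_code' field of the reply."""
    request = build_completion_request(code, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["generated_code"]


# Usage, with the Flask app running in another terminal:
#     print(request_completion("def fibonacci(n):"))
```

The generous timeout is deliberate, since generation on a local GPU can take a while for longer prompts.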

Step 4: Create a Frontend UI

Finally, let's create a basic frontend for our coding assistant.

- Create a file called index.html in the same directory as app.py with the following:

```html
<!DOCTYPE html>
<html>
<head>
  <title>AI Coding Assistant</title>
</head>
<body>
  <h1>AI Coding Assistant</h1>
  <textarea id="code" rows="10" cols="50"></textarea>
  <br>
  <button onclick="complete()">Complete Code</button>
  <p>Generated Code:</p>
  <pre id="generated-code"></pre>
  <script>
    function complete() {
      var code = document.getElementById("code").value;
      fetch('/complete', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json'
        },
        body: JSON.stringify({code: code})
      })
      .then(response => response.json())
      .then(data => {
        document.getElementById("generated-code").textContent = data.generated_code;
      });
    }
  </script>
</body>
</html>
```

This creates a simple HTML page with a textarea where the user can enter their code, and a button to send the code to the /complete API endpoint. The generated code completion is then displayed below.

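- With the Flask app running, open the page in a web browser and start coding with the help of your AI assistant! Because the page calls the relative URL /complete, it has to be served by the Flask app itself (by default at http://127.0.0.1:5000/) rather than opened directly from disk.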

Deployment and Next Steps

Congratulations, you now have a working AI coding assistant powered by Llama 3! Some additional things to try:

- Deploy your app to a cloud platform like AWS or Heroku for others to use

- Expand the frontend with syntax highlighting, multi-file support, etc.

- Fine-tune Llama 3 on additional programming languages

- Experiment with alternative model architectures and hyperparameters

With the incredible capabilities of large language models like Llama 3, the possibilities are endless. I hope this guide has helped you get started with building your own AI coding tools. Happy coding!