The DALL-E 3 API brings OpenAI's latest image generation model into your own applications, turning text descriptions into images. Whether you're a developer, artist, or AI enthusiast, understanding how to use the DALL-E 3 API effectively opens up a wide range of creative possibilities. This article walks through the essential steps and considerations for making the most of it.

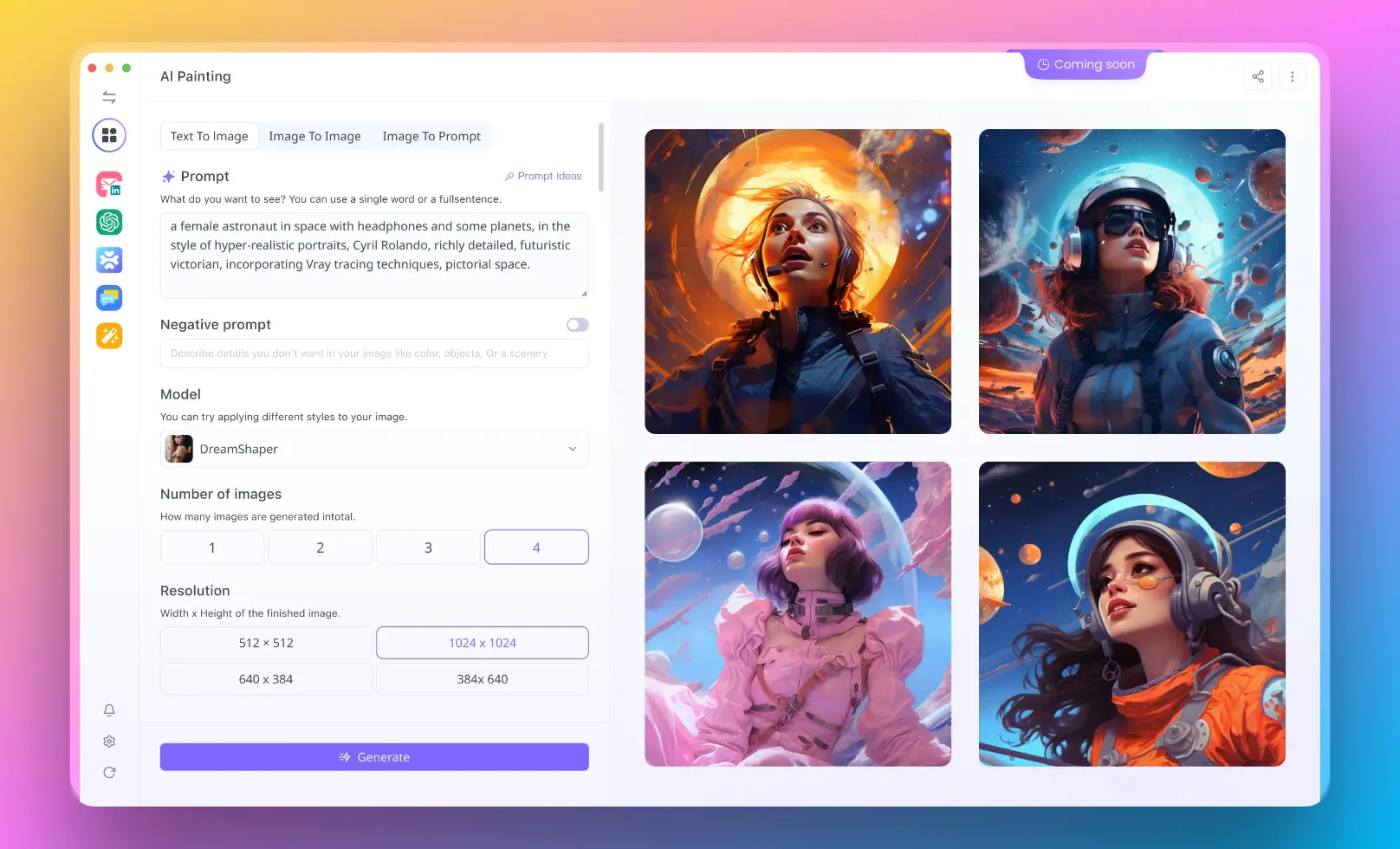

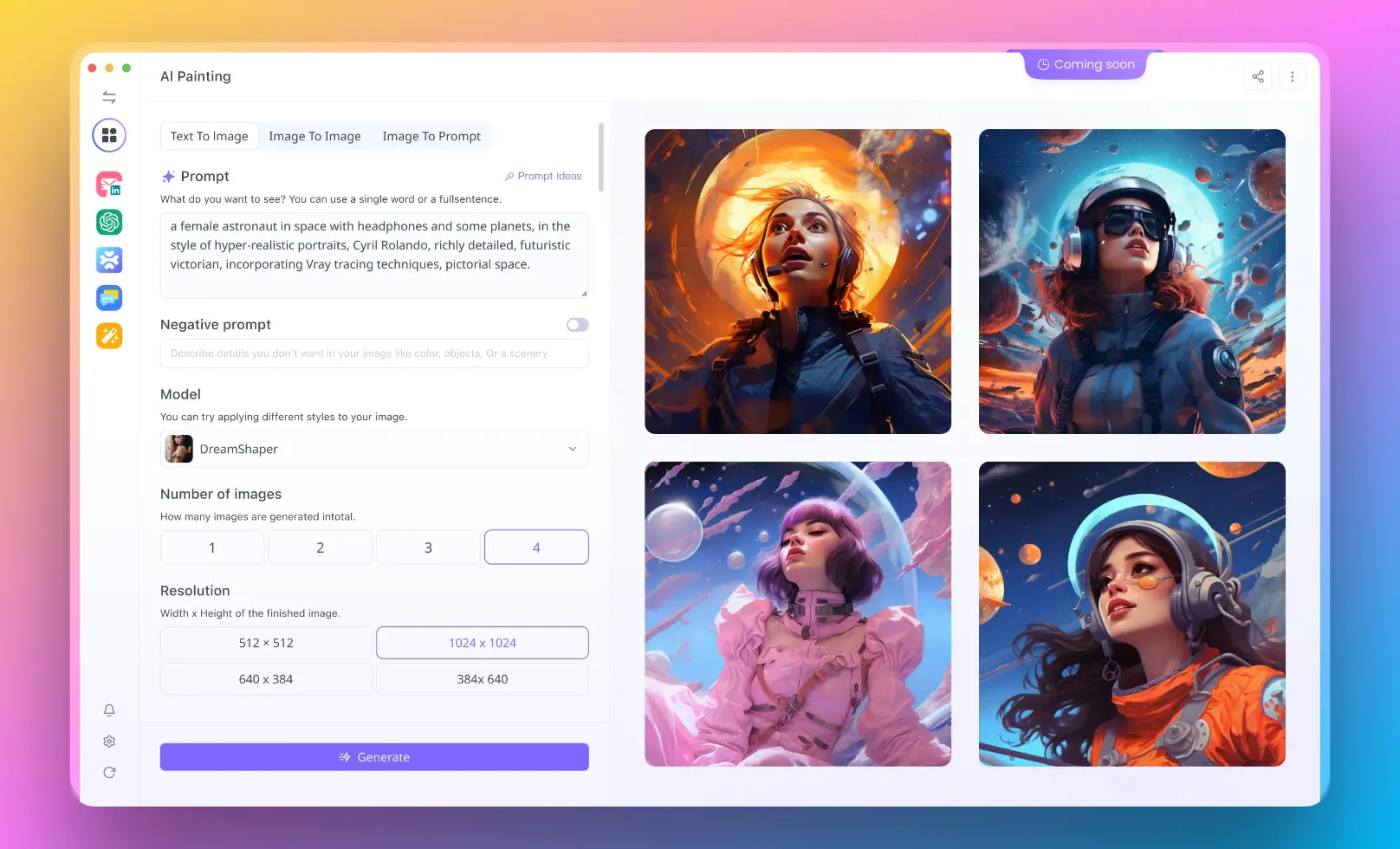

Before we get started, you might want to take a look at Anakin AI.

Having trouble paying 100+ AI API bills? Anakin AI brings AI models like GPT, DALL-E, and Stable Diffusion together in one place. Here’s what makes Anakin AI stand out:

- All-in-One Platform: Easily access various AI models in one place, eliminating the need to manage multiple APIs or billing accounts.

- No Payment Hassle: Dive into AI without the complexities of setup and payment processes, making it more accessible to a wider audience.

- No Code APP Builder: Empower users to create AI-driven applications without any coding knowledge, opening up endless possibilities for innovation and creativity.

Anakin AI is your gateway to exploring and leveraging the power of artificial intelligence, making it easier than ever to integrate AI into your projects or business solutions.

What is DALL-E 3?

Simply put, DALL-E 3 is an AI model from OpenAI that creates images from textual descriptions.

DALL-E 3, the latest iteration in OpenAI's DALL-E series, is built natively on ChatGPT and offers improved image generation capabilities. Unlike its predecessor, DALL-E 2, DALL-E 3 supports a `style` option with "vivid" and "natural" modes, letting users tailor the stylistic output of their images.

How Does DALL-E 3 Work?

The DALL-E API generates images from textual prompts: the model interprets the concepts described in your prompt and renders them as an image.

DALL-E 3 can also be used inside ChatGPT, where images are generated directly within the conversation; the API lets you bring the same capability into your own applications.
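With DALL-E 3, the API automatically rewrites your prompt to add detail before generating the image, and returns the rewritten text as `revised_prompt` alongside each image (in the Python SDK, `response.data[0].revised_prompt`). The sketch below pulls those fields out of a response dictionary shaped like the endpoint's JSON; `summarize_generation` and the sample response are hypothetical illustrations, not part of any SDK.

```python
def summarize_generation(response: dict) -> list:
    """Collect the URL, base64 flag, and revised prompt for each generated image."""
    results = []
    for item in response.get("data", []):
        results.append(
            {
                "url": item.get("url"),  # present when response_format="url"
                "has_b64": "b64_json" in item,  # present when response_format="b64_json"
                "revised_prompt": item.get("revised_prompt"),  # returned for dall-e-3
            }
        )
    return results


# Hypothetical response, shaped like the JSON returned by the generations endpoint
sample_response = {
    "created": 1700000000,
    "data": [
        {
            "url": "https://example.com/generated.png",
            "revised_prompt": "A detailed digital illustration of a cyberpunk monkey hacker dreaming of bananas",
        }
    ],
}

for image in summarize_generation(sample_response):
    print("Prompt was rewritten to:", image["revised_prompt"])
```

Comparing `revised_prompt` with what you sent is a quick way to see how the model interpreted your request.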

How to Access the DALL-E 3 API

To begin using DALL-E 3, you'll need to:

- Visit the official OpenAI API website and sign up for an OpenAI account.

- Create an API key from your account.

- Review DALL-E API pricing, since the per-image cost of DALL-E 3 determines the scale at which you can use the API for your projects.

The OpenAI API's image generation capabilities can be accessed from various programming languages, and OpenAI's documentation includes tutorials that guide you through the process.

You can read our tutorial to learn more about how to use an OpenAI API key.
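Under the hood, the client libraries send an HTTPS POST request with a JSON body and a Bearer token to the image generations endpoint. As a minimal sketch using only Python's standard library, the hypothetical helper `build_generation_request` assembles such a request without sending it; the commented lines show how it could be sent, assuming a valid key and network access.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/images/generations"


def build_generation_request(prompt: str, api_key: str, model: str = "dall-e-3",
                             size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a POST request to the image generations endpoint."""
    body = {"model": model, "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),  # JSON request body
        headers={
            "Authorization": f"Bearer {api_key}",  # API key from your OpenAI account
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_generation_request(
    "A watercolor lighthouse at dawn",
    api_key=os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as resp:
#     print(json.load(resp)["data"][0]["url"])
```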

How to Use the DALL-E 3 API

The OpenAI Images API has three endpoints. Only Generations supports DALL-E 3; Edits and Variations support only DALL-E 2 at this time:

- Generations: generates an image or images based on an input caption

- Edits: edits or extends an existing image

- Variations: generates variations of an input image
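Each endpoint has its own HTTP path, and, per the parameter notes later in this article, edits and variations accept only `dall-e-2`. A small lookup table like the hypothetical `IMAGE_ENDPOINTS` below (a sketch, not something OpenAI provides) can catch a model/endpoint mismatch before a request is sent.

```python
# Endpoint paths and the models each one accepts, per this article's descriptions
IMAGE_ENDPOINTS = {
    "generations": {"path": "/v1/images/generations", "models": {"dall-e-2", "dall-e-3"}},
    "edits": {"path": "/v1/images/edits", "models": {"dall-e-2"}},
    "variations": {"path": "/v1/images/variations", "models": {"dall-e-2"}},
}


def endpoint_url(endpoint: str, model: str, base: str = "https://api.openai.com") -> str:
    """Return the full URL for an endpoint, refusing models it does not support."""
    spec = IMAGE_ENDPOINTS[endpoint]
    if model not in spec["models"]:
        raise ValueError(f"{endpoint} does not support {model}; use one of {sorted(spec['models'])}")
    return base + spec["path"]


print(endpoint_url("generations", "dall-e-3"))  # https://api.openai.com/v1/images/generations
```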

How to Set Up the DALL-E 3 API (Easily)

- Import the packages you'll need.

- Set your OpenAI API key: you can do this by running `export OPENAI_API_KEY="your API key"` in your terminal.

- Set a directory to save images to.

```python
# imports
from openai import OpenAI  # OpenAI Python library to make API calls
import requests  # used to download images
import os  # used to access filepaths
from PIL import Image  # used to print and edit images
from IPython.display import display  # used to show images inline in Jupyter

# initialize OpenAI client
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY", "<your OpenAI API key if not set as env var>"))

# set a directory to save DALL·E images to
image_dir_name = "images"
image_dir = os.path.join(os.curdir, image_dir_name)

# create the directory if it doesn't yet exist
if not os.path.isdir(image_dir):
    os.mkdir(image_dir)

# print the directory to save to
print(f"{image_dir=}")
```
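The setup code above falls back to a placeholder string when `OPENAI_API_KEY` is missing, so the mistake only surfaces later as a failed request. If you would rather fail immediately, a small helper like the hypothetical `require_api_key` below (a sketch, not part of the OpenAI library) reads the variable and raises a clear error.

```python
import os


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, failing fast with a clear message."""
    api_key = os.environ.get(var_name, "").strip()
    if not api_key:
        raise RuntimeError(
            f"{var_name} is not set; run `export {var_name}=\"your API key\"` first"
        )
    return api_key


# Usage with the client from the setup step:
# client = OpenAI(api_key=require_api_key())
```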

Image Generation with the DALL-E 3 API

The generation API endpoint creates an image based on a text prompt.

The parameters below are summarized from OpenAI's official API reference for the images endpoint.

Required inputs:

- prompt (str): A text description of the desired image(s). The maximum length is 1000 characters for dall-e-2 and 4000 characters for dall-e-3.

Optional inputs:

- model (str): The model to use for image generation. Defaults to dall-e-2, so pass "dall-e-3" explicitly.

- n (int): The number of images to generate. Must be between 1 and 10. Defaults to 1. For dall-e-3, only n=1 is supported.

- quality (str): The quality of the image that will be generated, either standard or hd (defaults to standard). hd creates images with finer details and greater consistency across the image. This param is only supported for dall-e-3.

- response_format (str): The format in which the generated images are returned. Must be one of "url" or "b64_json". Defaults to "url". Returned URLs are only valid for 60 minutes.

- size (str): The size of the generated images. Must be one of 256x256, 512x512, or 1024x1024 for dall-e-2. Must be one of 1024x1024, 1792x1024, or 1024x1792 for dall-e-3 models. Defaults to "1024x1024".

- style (str | null): The style of the generated images. Must be one of vivid or natural. Vivid causes the model to lean towards generating hyper-real and dramatic images. Natural causes the model to produce more natural, less hyper-real looking images. This param is only supported for dall-e-3.

- user (str): A unique identifier representing your end-user, which will help OpenAI to monitor and detect abuse.
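Several of these rules differ between models, so it can help to check a request locally before paying for an API call. The hypothetical `validate_generation` helper below encodes the limits listed above (prompt length, `n`, `size`, and the dall-e-3-only `quality` and `style`); it is a sketch under those assumptions, and OpenAI's server-side validation remains authoritative.

```python
# Generation limits per model, as listed above
LIMITS = {
    "dall-e-2": {"max_prompt": 1000, "max_n": 10, "sizes": {"256x256", "512x512", "1024x1024"}},
    "dall-e-3": {"max_prompt": 4000, "max_n": 1, "sizes": {"1024x1024", "1792x1024", "1024x1792"}},
}


def validate_generation(model, prompt, n=1, size="1024x1024", quality=None, style=None):
    """Return a list of problems; an empty list means the request looks valid."""
    limits = LIMITS[model]
    problems = []
    if len(prompt) > limits["max_prompt"]:
        problems.append(f"prompt is {len(prompt)} chars; {model} allows {limits['max_prompt']}")
    if not 1 <= n <= limits["max_n"]:
        problems.append(f"n={n} is outside 1..{limits['max_n']} for {model}")
    if size not in limits["sizes"]:
        problems.append(f"size {size} is not supported by {model}")
    if model != "dall-e-3" and (quality is not None or style is not None):
        problems.append("quality and style are only supported for dall-e-3")
    if style is not None and style not in {"vivid", "natural"}:
        problems.append(f"style must be 'vivid' or 'natural', not {style!r}")
    return problems


print(validate_generation("dall-e-3", "A cyberpunk monkey", size="1792x1024", style="natural"))  # []
print(validate_generation("dall-e-3", "A cyberpunk monkey", n=2))  # ['n=2 is outside 1..1 for dall-e-3']
```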

```python
# create an image

# set the prompt
prompt = "A cyberpunk monkey hacker dreaming of a beautiful bunch of bananas, digital art"

# call the OpenAI API
generation_response = client.images.generate(
    model="dall-e-3",
    prompt=prompt,
    n=1,  # dall-e-3 only supports n=1
    size="1024x1024",
    response_format="url",
)

# print response
print(generation_response)

# save the image
generated_image_name = "generated_image.png"  # any name you like; the filetype should be .png
generated_image_filepath = os.path.join(image_dir, generated_image_name)
generated_image_url = generation_response.data[0].url  # extract image URL from response
generated_image = requests.get(generated_image_url).content  # download the image

with open(generated_image_filepath, "wb") as image_file:
    image_file.write(generated_image)  # write the image to the file

# print the image
print(generated_image_filepath)
display(Image.open(generated_image_filepath))
```
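Because returned URLs expire after 60 minutes, you may prefer `response_format="b64_json"`, in which case each item in `data` carries the image as a base64 string in its `b64_json` field instead of a URL. A minimal sketch of decoding and saving that payload, using a hypothetical `save_b64_image` helper:

```python
import base64


def save_b64_image(b64_data: str, filepath: str) -> int:
    """Decode a base64-encoded image, write it to filepath, and return the byte count."""
    image_bytes = base64.b64decode(b64_data)
    with open(filepath, "wb") as image_file:
        image_file.write(image_bytes)
    return len(image_bytes)


# With the client, prompt, and image_dir from the steps above, usage would look like:
# response = client.images.generate(model="dall-e-3", prompt=prompt, response_format="b64_json")
# save_b64_image(response.data[0].b64_json, os.path.join(image_dir, "generated_b64.png"))
```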

Variations

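The variations endpoint generates new images (variations) similar to an input image. It only supports dall-e-2, so the variations come from DALL-E 2 even when the input image was made with DALL-E 3.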

Here we'll generate variations of the image generated above.

Required inputs:

- image (str): The image to use as the basis for the variation(s). Must be a valid PNG file, less than 4MB, and square.

Optional inputs:

- model (str): The model to use for image variations. Only dall-e-2 is supported at this time.

- n (int): The number of images to generate. Must be between 1 and 10. Defaults to 1.

- size (str): The size of the generated images. Must be one of "256x256", "512x512", or "1024x1024". Smaller images are faster. Defaults to "1024x1024".

- response_format (str): The format in which the generated images are returned. Must be one of "url" or "b64_json". Defaults to "url".

- user (str): A unique identifier representing your end-user, which will help OpenAI to monitor and detect abuse.
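Since the input must be a square PNG under 4MB, a quick local check can save a failed request; a 1792x1024 or 1024x1792 DALL-E 3 image, for example, would fail the square requirement. The sketch below reads only the PNG header (the 8-byte signature plus the IHDR width and height), so it is a sanity check rather than full PNG validation. `check_variation_input` is hypothetical, and reading "4MB" as 4 MiB is an assumption.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 4 * 1024 * 1024  # assumption: "less than 4MB" read as 4 MiB


def check_variation_input(data: bytes) -> list:
    """Return problems with an input image; an empty list means it passed."""
    # A PNG starts with an 8-byte signature, then the IHDR chunk (length, type, width, height)
    if len(data) < 24 or not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        return ["not a PNG file"]
    width, height = struct.unpack(">II", data[16:24])  # big-endian 4-byte integers
    problems = []
    if width != height:
        problems.append(f"image is {width}x{height}, but it must be square")
    if len(data) >= MAX_BYTES:
        problems.append(f"image is {len(data)} bytes; it must be under 4MB")
    return problems


# Usage with the file saved in the generation step:
# with open(generated_image_filepath, "rb") as f:
#     print(check_variation_input(f.read()))
```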

```python
# create variations

# call the OpenAI API, using `create_variation` rather than `generate`
variation_response = client.images.create_variation(
    image=generated_image,  # generated_image is the image generated above
    model="dall-e-2",  # variations only support dall-e-2
    n=2,
    size="1024x1024",
    response_format="url",
)

# print response
print(variation_response)

# save the images
variation_urls = [datum.url for datum in variation_response.data]  # extract URLs
variation_images = [requests.get(url).content for url in variation_urls]  # download images
variation_image_names = [f"variation_image_{i}.png" for i in range(len(variation_images))]  # create names
variation_image_filepaths = [os.path.join(image_dir, name) for name in variation_image_names]  # create filepaths

for image, filepath in zip(variation_images, variation_image_filepaths):  # loop through the variations
    with open(filepath, "wb") as image_file:  # open the file
        image_file.write(image)  # write the image to the file

# print the original image
print(generated_image_filepath)
display(Image.open(generated_image_filepath))

# print the new variations
for variation_image_filepath in variation_image_filepaths:
    print(variation_image_filepath)
    display(Image.open(variation_image_filepath))
```

How to Edit an Image Generated by the DALL-E 3 API

The edit endpoint uses DALL-E 2 (the only model it supports) to regenerate a specified portion of an existing image. Three inputs are needed: the image to edit, a mask specifying the portion to be regenerated, and a prompt describing the desired image.

Required inputs:

- image (str): The image to edit. Must be a valid PNG file, less than 4MB, and square. If mask is not provided, image must have transparency, which will be used as the mask.

- prompt (str): A text description of the desired image(s). The maximum length is 1000 characters.

Optional inputs:

- mask (file): An additional image whose fully transparent areas (i.e. where alpha is zero) indicate where image should be edited. Must be a valid PNG file, less than 4MB, and have the same dimensions as image.

- model (str): The model to use for image editing. Only dall-e-2 is supported at this time.

- n (int): The number of images to generate. Must be between 1 and 10. Defaults to 1.

- size (str): The size of the generated images. Must be one of "256x256", "512x512", or "1024x1024". Smaller images are faster. Defaults to "1024x1024".

- response_format (str): The format in which the generated images are returned. Must be one of "url" or "b64_json". Defaults to "url".

- user (str): A unique identifier representing your end-user, which will help OpenAI to monitor and detect abuse.

Set the Edit Area with a Mask

An edit requires a "mask" to specify which portion of the image to regenerate. Any pixel with an alpha of 0 (transparent) will be regenerated. The code below creates a 1024x1024 mask where the bottom half is transparent.

```python
# create a mask
width = 1024
height = 1024
mask = Image.new("RGBA", (width, height), (0, 0, 0, 255))  # create an opaque image mask

# set the bottom half to be transparent
for x in range(width):
    for y in range(height // 2, height):  # only loop over the bottom half of the mask
        # set alpha (A) to zero to turn pixel transparent
        alpha = 0
        mask.putpixel((x, y), (0, 0, 0, alpha))

# save the mask
mask_name = "bottom_half_mask.png"
mask_filepath = os.path.join(image_dir, mask_name)
mask.save(mask_filepath)
```
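A mask is just an RGBA PNG whose fully transparent pixels mark the region to regenerate. As an alternative sketch that avoids Pillow's per-pixel loop, the hypothetical `bottom_half_mask_png` below writes a bottom-half mask using only the standard library (`zlib` and `struct`), building the PNG signature and the `IHDR`, `IDAT`, and `IEND` chunks by hand. The width and height must match the image you are editing.

```python
import struct
import zlib


def png_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, then a CRC over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def bottom_half_mask_png(width: int, height: int) -> bytes:
    """Return RGBA PNG bytes: top half opaque black, bottom half fully transparent."""
    opaque_row = b"\x00" + b"\x00\x00\x00\xff" * width  # filter byte 0, then RGBA pixels
    transparent_row = b"\x00" + b"\x00\x00\x00\x00" * width  # alpha 0 marks the edit area
    raw = b"".join(
        transparent_row if y >= height // 2 else opaque_row for y in range(height)
    )
    # IHDR: width, height, bit depth 8, color type 6 (RGBA), compression, filter, interlace
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 6, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + png_chunk(b"IHDR", ihdr)
        + png_chunk(b"IDAT", zlib.compress(raw))
        + png_chunk(b"IEND", b"")
    )


# Usage with image_dir from the setup step:
# with open(os.path.join(image_dir, "bottom_half_mask.png"), "wb") as f:
#     f.write(bottom_half_mask_png(1024, 1024))
```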

Perform the Edit

Now we supply our image, prompt, and mask to the API to get an edited version of our image.

```python
# edit an image

# call the OpenAI API; the with-block closes both files afterwards
with open(generated_image_filepath, "rb") as image_file, open(mask_filepath, "rb") as mask_file:
    edit_response = client.images.edit(
        image=image_file,  # from the generation section
        mask=mask_file,  # from right above
        prompt=prompt,  # from the generation section
        model="dall-e-2",  # edits only support dall-e-2
        n=1,
        size="1024x1024",
        response_format="url",
    )

# print response
print(edit_response)

# save the image
edited_image_name = "edited_image.png"  # any name you like; the filetype should be .png
edited_image_filepath = os.path.join(image_dir, edited_image_name)
edited_image_url = edit_response.data[0].url  # extract image URL from response
edited_image = requests.get(edited_image_url).content  # download the image

with open(edited_image_filepath, "wb") as image_file:
    image_file.write(edited_image)  # write the image to the file

# print the original image
print(generated_image_filepath)
display(Image.open(generated_image_filepath))

# print edited image
print(edited_image_filepath)
display(Image.open(edited_image_filepath))
```

This section continues the image-editing walkthrough, focusing on saving and displaying the edited images.

After obtaining the edited image from the DALL·E API, as shown in the previous steps, you can proceed to save the image to a designated directory and then display it for verification or further actions. The steps provided illustrate how to manage the image files effectively, ensuring they are accessible for any subsequent processing or review.

Save the Edited Image

Once you receive the response from the DALL·E API's edit endpoint, you can save the edited image using the following Python code snippet. This code demonstrates how to extract the image URL from the API response, download the image, and save it to a file within your specified directory.

```python
# Save the edited image
edited_image_name = "edited_image.png"  # Define the filename for the edited image
edited_image_filepath = os.path.join(image_dir, edited_image_name)  # Create the full filepath
edited_image_url = edit_response.data[0].url  # Extract the URL of the edited image from the response

# Download the image from the URL
edited_image = requests.get(edited_image_url).content

# Open the file in binary write mode and save the downloaded image
with open(edited_image_filepath, "wb") as image_file:
    image_file.write(edited_image)
```

Display the Edited Image

After saving the edited image, you may want to display it within your Jupyter notebook to visually confirm the editing results. The following code snippet uses the Image class from the PIL package to load and display the edited image file.

```python
# Display the edited image
print("Edited Image:")
display(Image.open(edited_image_filepath))
```

This section of the code reads the edited image from the file you saved earlier and displays it using the display function, which is particularly useful in Jupyter notebooks for inline visualization of images and other multimedia elements.

How to Optimize DALL-E 3 API Performance

Craft Effective Prompts

Your prompt plays a pivotal role in the quality of generated images. Whether you use DALL-E 3 through ChatGPT or the API, mastering the art of prompt crafting can significantly affect the relevance and creativity of the output.

Using DALL-E 3 effectively means experimenting with different prompt styles and structures to achieve the desired results.
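One way to experiment systematically is to keep the subject fixed and vary one element at a time, such as the art style. The hypothetical `build_prompt` helper below assembles comma-separated prompts (mirroring the example prompt used earlier) and enforces the prompt length limits of 1000 characters for dall-e-2 and 4000 for dall-e-3. The subject/details/style structure is just a convention, not something the API requires.

```python
PROMPT_LIMITS = {"dall-e-2": 1000, "dall-e-3": 4000}  # max prompt length in characters


def build_prompt(subject: str, style: str = "", details: tuple = (), model: str = "dall-e-3") -> str:
    """Join a subject, optional details, and an optional style into one prompt string."""
    parts = [subject.strip()]
    parts += [d.strip() for d in details if d.strip()]  # skip empty details
    if style.strip():
        parts.append(style.strip())  # style goes last, as in "..., digital art"
    prompt = ", ".join(parts)
    if len(prompt) > PROMPT_LIMITS[model]:
        raise ValueError(f"prompt has {len(prompt)} characters; {model} allows {PROMPT_LIMITS[model]}")
    return prompt


# Try a few style variations of the same subject
for style in ("digital art", "watercolor painting", "35mm film photograph"):
    print(build_prompt("A cyberpunk monkey hacker", style=style, details=("dreaming of bananas",)))
```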

Manage DALL-E 3 API Costs

DALL-E pricing varies by model: both DALL-E 3 and DALL-E 2 are billed per image, and your account is also subject to rate limits on how many requests you can make. Knowing how much each image costs is essential for keeping your spending in check.

Image dimensions and quality settings also affect the price per image, letting you balance quality against expenditure.
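Because pricing is per image and depends on model, quality, and size, estimating a batch before running it is simple arithmetic. The sketch below uses illustrative per-image prices; replace them with the current figures from OpenAI's pricing page before relying on the result. `PRICE_PER_IMAGE` and `estimate_cost` are hypothetical names, and the "standard" quality key for dall-e-2 is only a placeholder, since that model has no quality setting.

```python
# Illustrative per-image prices in USD; check OpenAI's pricing page for current rates
PRICE_PER_IMAGE = {
    ("dall-e-3", "standard", "1024x1024"): 0.040,
    ("dall-e-3", "standard", "1792x1024"): 0.080,
    ("dall-e-3", "hd", "1024x1024"): 0.080,
    ("dall-e-3", "hd", "1792x1024"): 0.120,
    ("dall-e-2", "standard", "1024x1024"): 0.020,  # "standard" is a placeholder key here
    ("dall-e-2", "standard", "256x256"): 0.016,
}


def estimate_cost(jobs):
    """Sum the cost of (model, quality, size, count) jobs using PRICE_PER_IMAGE."""
    total = 0.0
    for model, quality, size, count in jobs:
        total += PRICE_PER_IMAGE[(model, quality, size)] * count
    return round(total, 4)  # round away floating-point noise


batch = [("dall-e-3", "standard", "1024x1024", 50), ("dall-e-3", "hd", "1792x1024", 10)]
print(estimate_cost(batch))  # 50*0.04 + 10*0.12 = 3.2
```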

The Evolution from DALL-E 2 to DALL-E 3

The move from DALL-E 2 to DALL-E 3 showcases significant advancements in AI-driven image generation.

With each release, OpenAI has pushed the boundaries of what's possible, culminating in the highly versatile and capable DALL-E 3.

Conclusion


As we look toward the future, the potential of DALL-E 3 and OpenAI's image generation API continues to expand, suggesting a future where AI plays an increasingly central role in creative processes.

In conclusion, mastering the DALL-E 3 API opens up a vast canvas for digital creativity and innovation. By understanding how to use DALL-E 3, from accessing the API to crafting effective prompts and managing costs, users can explore new horizons in AI-driven art and design. Whether you're using DALL-E 3 for professional projects or personal exploration, the journey into the world of AI-generated imagery promises to be both fascinating and rewarding.