Don't miss out on Anakin AI!

Anakin AI is an all-in-one platform for workflow automation: create powerful AI apps with an easy-to-use no-code app builder, using DeepSeek, OpenAI's o3-mini-high, Claude 3.7 Sonnet, FLUX, Minimax Video, Hunyuan...

Build your dream AI app in minutes, not weeks, with Anakin AI!

Introduction

The artificial intelligence landscape is evolving rapidly, and powerful language models are becoming increasingly accessible to developers and businesses. Among them, DeepSeek has emerged as a formidable competitor to established providers such as OpenAI, offering strong reasoning capabilities at competitive prices. This guide covers the DeepSeek API's pricing structure, its features, and how to use Anakin AI to get the most out of it.

Understanding DeepSeek Models

Before diving into pricing details, it's essential to understand the various models offered by DeepSeek:

DeepSeek-R1

DeepSeek-R1 is the flagship reasoning model with exceptional capabilities in mathematics, coding, and logical reasoning. Key specifications include:

- 671 billion total parameters using Mixture-of-Experts (MoE) architecture

- Only 37 billion active parameters per token (for efficiency)

- Up to 128K tokens context length

- Open-source under MIT license

- Performance comparable to OpenAI's o1 model

DeepSeek-V3

DeepSeek-V3 represents the company's most advanced general-purpose offering:

- MoE architecture with 671 billion parameters

- Excellent for both general and specialized tasks

- Available in base and chat-optimized versions

Pricing Structure of DeepSeek API

DeepSeek offers a transparent and competitive pricing model designed to provide high-quality AI capabilities without breaking the bank.

DeepSeek-R1 Pricing

Standard Pricing:

- Input Tokens (Cache Miss): $0.55 per million tokens

- Input Tokens (Cache Hit): $0.14 per million tokens

- Output Tokens: $2.19 per million tokens

Context Caching System

A standout feature of DeepSeek's pricing model is its intelligent caching system:

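- How it works: the system caches prompt prefixes (for example, a shared system prompt or reference document) and reuses them when a later request starts with the same content; cached entries are kept for several hours to a few days

- Cost savings: on DeepSeek-R1, cache-hit input tokens cost $0.14 per million instead of $0.55, roughly 75% less; output tokens are billed at the normal rate

- Automatic management: enabled by default, with no setup or additional fees

- Performance benefit: lower latency for requests whose prompt prefix is already cached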

This caching mechanism makes DeepSeek particularly attractive for businesses handling large volumes of similar queries, as it can lead to substantial cost reductions over time.
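To see how much caching actually saves, it helps to blend the three R1 rates listed above for a given cache hit ratio. The sketch below is a back-of-the-envelope estimator, assuming those per-million-token rates still apply and that you can estimate what fraction of your input tokens will be cache hits; the function name and the example workload are illustrative, not part of DeepSeek's API.

```python
# Per-million-token rates for DeepSeek-R1 (USD), copied from the pricing list above.
# These are assumed snapshots; check DeepSeek's current pricing before relying on them.
R1_INPUT_CACHE_MISS = 0.55
R1_INPUT_CACHE_HIT = 0.14
R1_OUTPUT = 2.19


def r1_request_cost(input_tokens: int, output_tokens: int, cache_hit_ratio: float) -> float:
    """Estimate the USD cost of one DeepSeek-R1 request.

    cache_hit_ratio is the (assumed) fraction of input tokens served from the cache.
    """
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    hit_tokens = input_tokens * cache_hit_ratio
    miss_tokens = input_tokens - hit_tokens
    cost = (
        hit_tokens * R1_INPUT_CACHE_HIT
        + miss_tokens * R1_INPUT_CACHE_MISS
        + output_tokens * R1_OUTPUT  # output is never discounted by the cache
    )
    return cost / 1_000_000


# Hypothetical workload: 1M requests, each with a 2,000-token prompt and a 500-token answer.
for ratio in (0.0, 0.5, 0.9):
    total = 1_000_000 * r1_request_cost(2_000, 500, ratio)
    print(f"cache hit ratio {ratio:.0%}: ${total:,.2f}")
```

With these assumed numbers the total drops from about $2,195 at 0% cache hits to about $1,457 at 90%. Output tokens make up most of the remaining bill, so caching trims input costs rather than eliminating spend.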

DeepSeek-V2 Pricing

For those seeking more budget-friendly options, DeepSeek-V2 offers:

- Input tokens: Significantly cheaper than GPT-3.5-Turbo

- Output tokens: Competitive rates with similar quality

- No minimum spending requirements

Pricing Comparison

When compared to OpenAI's offerings:

| Model | Input Pricing (per M tokens) | Output Pricing (per M tokens) | Context Length |
|---|---|---|---|
| DeepSeek-R1 | $0.55 (cache miss) / $0.14 (cache hit) | $2.19 | Up to 128K |
| DeepSeek-V3 | Competitive rates | Competitive rates | Extended |
| OpenAI GPT-4 Turbo | $10.00 | $30.00 | 128K |
| OpenAI o1 | $15.00 | $60.00 | 200K |
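Per-token prices are easier to compare once they are applied to a concrete workload. The sketch below assumes a hypothetical monthly volume of 50M input and 10M output tokens. It uses the DeepSeek-R1 rates and the $10/$30 GPT-4 rates from the table above, which are snapshots that may have changed since publication.

```python
# Per-million-token rates (USD) taken from the comparison table above.
# Treat them as placeholders: provider prices change over time.
RATES = {
    "DeepSeek-R1 (cache miss)": {"input": 0.55, "output": 2.19},
    "DeepSeek-R1 (cache hit)": {"input": 0.14, "output": 2.19},
    "GPT-4 ($10/$30 row)": {"input": 10.00, "output": 30.00},
}


def monthly_cost(rates: dict, input_tokens: int, output_tokens: int) -> float:
    """USD cost of a month's input and output tokens at the given per-million rates."""
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


# Hypothetical workload: 50M input tokens and 10M output tokens per month.
for model, rates in RATES.items():
    print(f"{model:26s} ${monthly_cost(rates, 50_000_000, 10_000_000):>10,.2f}")
```

Under these assumptions the workload costs roughly $49.40 (R1, all cache misses) or $28.90 (R1, all cache hits), compared with $800.00 at the GPT-4 rates.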

Getting Started With DeepSeek API

Implementing DeepSeek API into your applications is straightforward:

Step 1: Obtain API Key

- Visit the DeepSeek Developer Portal

- Create an account or log in

- Generate your API key

- Store it securely for use in your applications
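One common way to store the key securely is to keep it out of source code entirely and read it from an environment variable at startup. This is a minimal sketch that assumes a variable named `DEEPSEEK_API_KEY`; the name is a convention chosen for illustration, not something the DeepSeek API requires.

```python
import os


def load_api_key(var_name: str = "DEEPSEEK_API_KEY") -> str:
    """Read the DeepSeek API key from an environment variable instead of hard-coding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail fast with a clear message rather than sending an unauthenticated request.
        raise RuntimeError(f"Set the {var_name} environment variable before calling the API.")
    return key
```

After running `export DEEPSEEK_API_KEY=...` in your shell, you can write `API_KEY = load_api_key()` instead of pasting the key into the script.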

Step 2: Set Up Your Environment

```python
import requests

API_KEY = "your_api_key"  # replace with your own key; avoid committing it to source control
BASE_URL = "https://api.deepseek.com"
```

Step 3: Make Your First API Call

Using Python:

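```python
def query_deepseek(prompt):
    """Send a single-turn chat request to the deepseek-reasoner (R1) model."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {API_KEY}",
    }
    data = {
        "model": "deepseek-reasoner",
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "stream": False,
    }
    # Reasoning requests can take a while, so allow a generous timeout.
    response = requests.post(f"{BASE_URL}/chat/completions", json=data, headers=headers, timeout=300)
    response.raise_for_status()  # raise on HTTP errors (bad key, rate limit) instead of parsing an error body
    return response.json()


result = query_deepseek("Solve this math problem: What is the integral of x^2?")
# The generated answer is in the first choice's message.
print(result["choices"][0]["message"]["content"])
```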

Using cURL:

```bash
curl https://api.deepseek.com/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <your_api_key>" \
  -d '{
        "model": "deepseek-reasoner",
        "messages": [
          {"role": "system", "content": "You are a helpful assistant."},
          {"role": "user", "content": "Explain quantum entanglement."}
        ],
        "stream": false
      }'
```

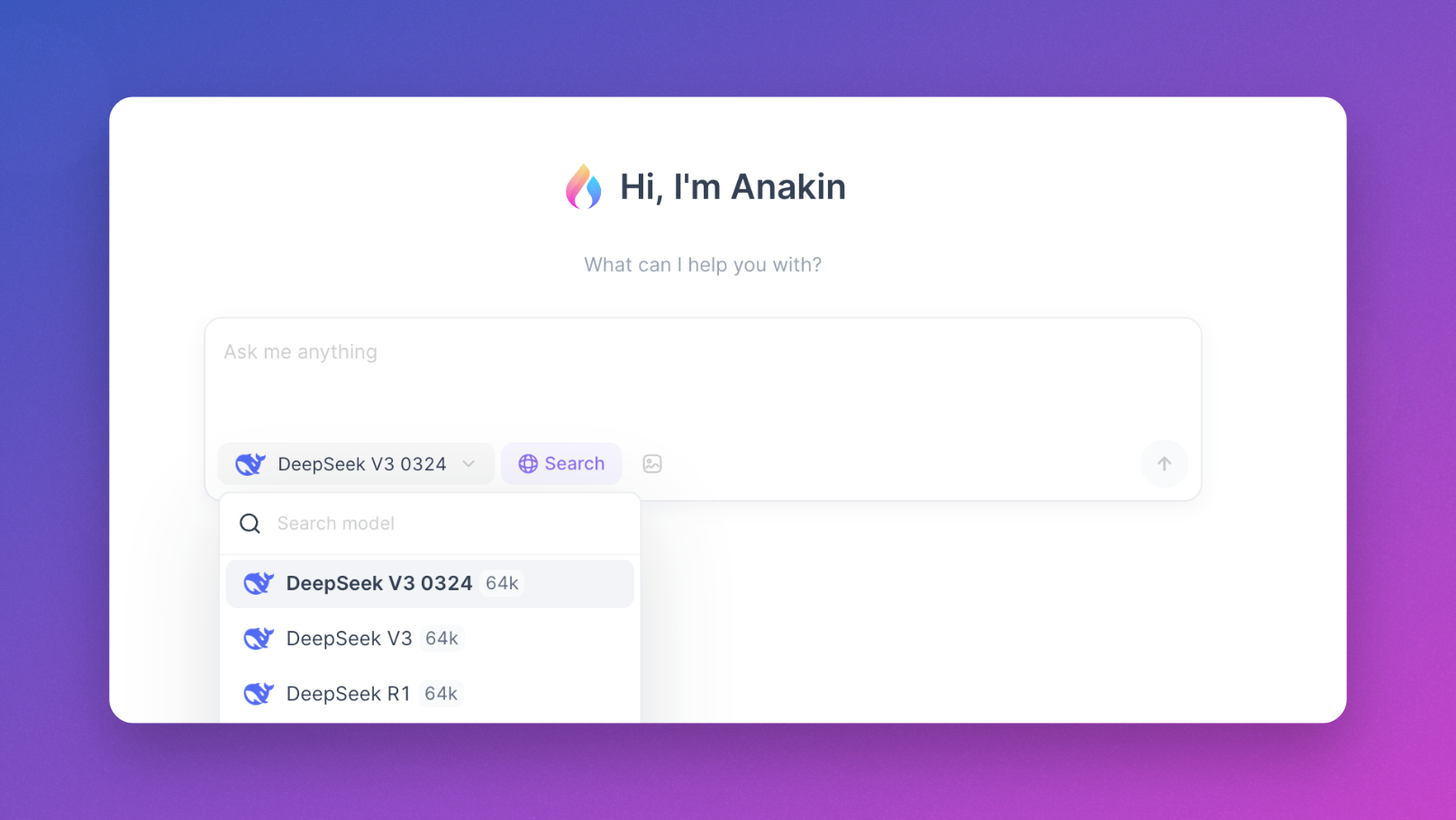

Leveraging Anakin AI with DeepSeek

While DeepSeek's API is powerful on its own, integrating it with Anakin AI unlocks even more capabilities and simplifies the development process.

What is Anakin AI?

Anakin AI is an all-in-one platform that allows you to:

- Create AI workflows without writing code

- Access 1000+ pre-built AI applications

- Integrate multiple AI models in a single workflow

- Manage AI costs efficiently through a credit system

Benefits of Using DeepSeek Through Anakin AI

- Simplified Integration: Use DeepSeek models without handling complex API configurations

- Multi-Model Workflows: Combine DeepSeek with other models like GPT, Claude, or Mixtral

- Visual Workflow Builder: Create complex AI processes through a drag-and-drop interface

- Cost Management: Use a credit system instead of managing multiple API subscriptions

- Pre-built Templates: Access specialized templates for various applications

Getting Started with Anakin AI and DeepSeek

- Sign up: Create an account on Anakin AI platform

- Choose DeepSeek: Select the appropriate DeepSeek model for your needs

- Build Workflow: Use the visual interface to design your AI process

- Deploy: Launch your solution with a single click

Example Use Cases with Anakin AI and DeepSeek

- Enhanced Customer Service:
  - Use DeepSeek-R1 for complex reasoning tasks
  - Process customer queries through Anakin's workflow system
  - Generate accurate and helpful responses at scale

- Content Generation Pipeline:
  - Create outlines with DeepSeek-V3
  - Generate detailed content based on outlines
  - Review and refine output using specialized models

- Programming Assistant:
  - Leverage DeepSeek-R1's coding capabilities
  - Create workflows for code generation, explanation, and debugging
  - Integrate with development tools through Anakin's interface

Cost Optimization Strategies

To maximize value when using DeepSeek's API, consider these strategies:

1. Leverage Context Caching

- Group similar queries to benefit from cache hits

- Structure applications to reuse context when possible

- Monitor cache hit rates to optimize performance
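Cache hits depend on requests sharing an identical prompt prefix, so keep the stable parts (system prompt, shared documents) at the start of each request and put the variable parts last. To monitor the hit rate, you can aggregate the usage data returned with each response. This sketch assumes the `usage` object contains `prompt_cache_hit_tokens` and `prompt_cache_miss_tokens` fields, as described in DeepSeek's context-caching documentation. Verify these names against the current API reference.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    """Running totals of cached vs. uncached prompt tokens across requests."""
    hit_tokens: int = 0
    miss_tokens: int = 0

    def record(self, usage: dict) -> None:
        # Field names assumed from DeepSeek's context-caching docs; missing fields count as 0.
        self.hit_tokens += usage.get("prompt_cache_hit_tokens", 0)
        self.miss_tokens += usage.get("prompt_cache_miss_tokens", 0)

    @property
    def hit_rate(self) -> float:
        total = self.hit_tokens + self.miss_tokens
        return self.hit_tokens / total if total else 0.0


stats = CacheStats()
# In a real application, pass response.json()["usage"] from each API call.
stats.record({"prompt_cache_hit_tokens": 0, "prompt_cache_miss_tokens": 1200})
stats.record({"prompt_cache_hit_tokens": 1024, "prompt_cache_miss_tokens": 176})
print(f"cache hit rate: {stats.hit_rate:.1%}")
```

A low hit rate usually means the request prefixes differ, for example because a timestamp or user ID appears near the top of the prompt.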

2. Optimize Prompt Engineering

- Keep prompts concise to minimize input token usage

- Use system prompts effectively to guide model behavior

- Balance context length against precision requirements

3. Choose the Right Model for the Task

- Use DeepSeek-R1 for complex reasoning and specialized tasks

- Consider DeepSeek-V2 for more general applications with budget constraints

- Match model capabilities to specific requirements
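Matching models to tasks can start as a simple routing rule in your application. The sketch below is hypothetical: its keyword list is purely illustrative, and a production router might use a classifier or explicit task types instead. It routes to `deepseek-reasoner` (R1) or to `deepseek-chat`, which at the time of writing is the API name for DeepSeek's general-purpose chat model (backed by V3 rather than V2).

```python
# Hypothetical routing rule: tasks that look like multi-step reasoning go to R1,
# everything else goes to the cheaper general-purpose chat model.
REASONING_HINTS = ("prove", "derive", "debug", "step by step", "integral", "algorithm")


def choose_model(task: str) -> str:
    """Return the DeepSeek model name to use for a task description."""
    text = task.lower()
    if any(hint in text for hint in REASONING_HINTS):
        return "deepseek-reasoner"
    return "deepseek-chat"


print(choose_model("Derive the closed form of this recurrence"))
print(choose_model("Summarize this customer email in two sentences"))
```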

4. Implement Batching

- Process related requests together when possible

- Use asynchronous processing for better throughput

- Balance batch size against latency requirements

Advanced Features of DeepSeek API

Chain-of-Thought Reasoning

DeepSeek-R1 excels at breaking down complex problems into manageable steps:

- Detailed step-by-step problem-solving

- Self-verification of intermediate results

- Transparent thought processes in outputs
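With the `deepseek-reasoner` model, the chain of thought is returned separately from the final answer. At the time of writing, DeepSeek's reasoning-model guide places it in a `reasoning_content` field next to `content`, and states that `reasoning_content` should not be sent back in the input messages of a follow-up turn. This small helper separates the two. It is a sketch based on that documented response shape, and you can apply it to the JSON returned by `query_deepseek` in Step 3.

```python
from typing import Tuple


def split_reasoning(response_json: dict) -> Tuple[str, str]:
    """Return (reasoning, answer) from a deepseek-reasoner chat completion."""
    message = response_json["choices"][0]["message"]
    # `reasoning_content` holds the chain of thought; `content` holds the final answer.
    # Missing or null fields are normalized to empty strings.
    return message.get("reasoning_content") or "", message.get("content") or ""
```

When you continue a conversation, append only the `answer` part to the message history as the assistant turn.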

Context Length Utilization

With support for up to 128K tokens, DeepSeek-R1 can:

- Process extensive documents

- Maintain coherence across long conversations

- Handle complex multi-part instructions
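Even a 128K-token window fills up in long conversations, so applications usually trim older turns before each request and leave room for the model's output. This sketch keeps the system prompt and as many recent messages as fit a token budget. The character-based estimate, which assumes about 3 characters per token, is a deliberately rough placeholder. Use a real tokenizer when exact counts matter.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str, chars_per_token: float = 3.0) -> int:
    """Very rough token estimate (assumed ratio); not DeepSeek's actual tokenizer."""
    return int(len(text) / chars_per_token) + 1


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep the system prompt plus the most recent messages that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))
```

Keeping the system prompt first preserves part of the cacheable prefix. However, dropping old turns changes everything after it, so aggressive trimming can lower cache hit rates. Set `max_tokens` below the context limit to leave room for the response.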

Performance Optimization

Fine-tune performance by:

- Adjusting the maximum output length (the max_tokens request parameter) for complex queries

- Utilizing context caching for repeated prompts

- Optimizing system prompts for specific tasks

Conclusion

DeepSeek API offers a compelling combination of performance, flexibility, and cost-effectiveness. Its competitive pricing structure, particularly with the innovative context caching system, makes it an attractive option for developers and businesses looking to implement advanced AI capabilities.

By leveraging Anakin AI's intuitive platform alongside DeepSeek's powerful models, you can create sophisticated AI workflows without deep technical expertise. This combination provides the best of both worlds: DeepSeek's state-of-the-art language models and Anakin AI's user-friendly interface.

Whether you're building a customer service solution, developing content at scale, or creating specialized tools, the DeepSeek API, especially when combined with Anakin AI, offers the performance, flexibility, and cost-efficiency needed to bring your AI vision to life.

Start exploring DeepSeek's capabilities through Anakin AI today, and discover how this powerful combination can transform your approach to artificial intelligence implementation.