OpenAI has recently unveiled its latest flagship model, GPT-4o, a groundbreaking advancement in the field of artificial intelligence. This multimodal model is capable of reasoning across text, audio, and visual inputs, delivering real-time responses in various formats. In this article, we'll delve into the capabilities of GPT-4o, explore its differences from previous models, and provide a step-by-step guide on how to leverage its power through the OpenAI API.


What is GPT-4o?

GPT-4o, or "GPT-4 Omni," is a significant leap forward in the realm of language models. Unlike its predecessors, which primarily focused on text-based inputs and outputs, GPT-4o can process and generate content across multiple modalities, including text, audio, and images. This multimodal approach opens up a world of possibilities, enabling more natural and engaging interactions between humans and AI systems.

One of the key advantages of GPT-4o is its ability to understand and reason about visual information. By incorporating images into your requests, you can have the model analyze and describe their content and answer questions about them.

Comparing GPT-4o with Other GPT Models

To better understand the capabilities of GPT-4o, let's compare it with other GPT models offered by OpenAI:

| Model | Description | Pricing | Rate Limits | Speed | Vision Capabilities | Multilingual Support |
|---|---|---|---|---|---|---|
| GPT-4o | Flagship multimodal model capable of handling text, audio, and visual inputs/outputs | 50% cheaper than GPT-4 Turbo ($5/M input tokens, $15/M output tokens) | 5x higher than GPT-4 Turbo (up to 10M tokens/min) | 2x faster than GPT-4 Turbo | Advanced vision capabilities, outperforming GPT-4 Turbo | Improved support for non-English languages |
| GPT-4 Turbo | Improved version of GPT-4, optimized for chat and text generation | - | - | - | Limited vision capabilities | - |
| GPT-4 | Large multimodal model accepting text or image inputs and outputting text | - | - | - | Advanced vision capabilities, but not as robust as GPT-4o | - |
| GPT-3.5 Turbo | Improved version of GPT-3, optimized for chat and text generation | - | - | - | No vision capabilities | - |
| DALL·E | Model specialized in generating and editing images based on natural language prompts | - | - | - | Specialized for image generation | - |

As the table shows, GPT-4o stands out from the other OpenAI models listed in cost, speed, and capabilities. It offers faster processing, higher rate limits, and improved support for non-English languages, making it a versatile choice for a wide range of applications.
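
To make the pricing row concrete, here is a small sketch that estimates the cost of a single request from its token counts. It assumes the prices quoted in the table ($5 per million input tokens, $15 per million output tokens); the function name and the example token counts are illustrative, and current prices may differ.

```python
# Prices from the comparison table (USD per 1M tokens).
# These are assumptions based on the table; check current pricing before relying on them.
GPT4O_INPUT_PRICE_PER_M = 5.00
GPT4O_OUTPUT_PRICE_PER_M = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request."""
    total = (
        input_tokens * GPT4O_INPUT_PRICE_PER_M
        + output_tokens * GPT4O_OUTPUT_PRICE_PER_M
    )
    return total / 1_000_000


# Hypothetical request: 2,000 prompt tokens and 500 completion tokens.
print(f"${estimate_cost(2_000, 500):.4f}")
```

Output tokens cost three times as much as input tokens at these prices, so long completions tend to dominate the bill.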

Accessing GPT-4o through the OpenAI API

To leverage the power of GPT-4o, you'll need to access it through the OpenAI API. Here's a step-by-step guide on how to get started:

- Set up your environment: Ensure you have Python installed on your system, along with the OpenAI library. If you haven't already, you can install the OpenAI library using pip:

pip install openai

- Obtain an API key: You'll need to obtain an API key from the OpenAI website. If you don't have an account, create one first. Once you have an account, navigate to the API Keys section and generate a new key.

- Import the required libraries and set the API key: In your Python script, import the necessary libraries and read the API key from an environment variable:

import os
import openai

# Read the key from the OPENAI_API_KEY environment variable instead of hardcoding it.
openai.api_key = os.environ["OPENAI_API_KEY"]

Before running the script, set the OPENAI_API_KEY environment variable to the API key you obtained from the OpenAI website.

- Make a text-only request: To start, let's make a simple text-only request to the GPT-4o API using the openai.chat.completions.create() method. (The older openai.ChatCompletion.create() call was removed in version 1.0 of the library.)

response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is the capital of France?"},
    ],
)

# The reply text is in the first choice's message.
print(response.choices[0].message.content)

In this example, we're asking the model "What is the capital of France?". The messages parameter is a list of dictionaries, where each dictionary represents a message in the conversation. The first message sets the system's role, instructing the model to act as a helpful assistant. The second message is the user's query.
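
Because the messages list carries the whole conversation, a follow-up question only makes sense to the model if earlier turns are sent again. The sketch below shows one way to assemble such a list; the helper name build_messages and the sample history are hypothetical, and the assistant reply is written by hand rather than returned by the API.

```python
def build_messages(system_prompt, history, user_input):
    """Assemble the `messages` list for a chat completion request.

    `history` is a list of (user_text, assistant_text) pairs from earlier turns.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for user_text, assistant_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The new question always goes last.
    messages.append({"role": "user", "content": user_input})
    return messages


# Hand-written history for illustration only.
history = [("What is the capital of France?", "The capital of France is Paris.")]
messages = build_messages(
    "You are a helpful assistant.", history, "What is its population?"
)
for message in messages:
    print(message["role"], "->", message["content"])
```

The resulting list can be passed as the messages argument of a request, so the model sees the earlier question and answer when it resolves "its" in the follow-up.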

- Incorporate images: One of the key features of GPT-4o is its ability to understand and reason about images. To incorporate an image into a request, make the user message's content a list that holds both the text prompt and the image:

image_url = "https://example.com/image.jpg"

response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "system", "content": "You are a helpful assistant that can analyze images."},
        {
            "role": "user",
            "content": [
                # The text prompt and the image travel together in one user message.
                {"type": "text", "text": "Describe the image."},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        },
    ],
)

print(response.choices[0].message.content)

In this example, the user message's content is a list with two parts: a text part containing the prompt and an image_url part pointing to the image. The API retrieves the image from the URL, so the script does not need to download or open it with requests or PIL. Raw image bytes cannot be passed directly as the message content.
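
For images that are not reachable by a public URL, such as a local file, the Chat Completions API also accepts a base64-encoded data URL in the image_url field. The helper below is a minimal sketch of building such a message; the function name image_message and the sample bytes are hypothetical.

```python
import base64
import mimetypes


def image_message(prompt, image_bytes, filename="image.jpg"):
    """Build a user message that pairs a text prompt with inline image data."""
    # Guess the MIME type from the file name; fall back to JPEG if unknown.
    mime_type = mimetypes.guess_type(filename)[0] or "image/jpeg"
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes for illustration; in practice, read them from a real
# file, for example: open("photo.png", "rb").read()
sample = image_message("Describe the image.", b"\x89PNG placeholder", "photo.png")
print(sample["content"][1]["image_url"]["url"][:30])
```

The returned dictionary takes the place of a plain text user message in the messages list.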

- Handling audio and video inputs (coming soon): While the current version of the GPT-4o API supports text and image inputs, the ability to handle audio and video inputs is expected to be introduced soon. Once these features are available, you'll be able to incorporate audio and video data into your requests, similar to how we handled images in the previous example.

Advanced Usage of GPT-4o

The GPT-4o API offers a range of additional parameters and options to fine-tune the model's behavior and output. Here are a few examples:

Adjusting the Temperature and Top-P Parameters

The temperature and top_p parameters control the randomness and diversity of the generated output. The temperature parameter ranges from 0 to 2: higher values make the output more random, while lower values make it more focused and deterministic. The top_p parameter (between 0 and 1) controls nucleus sampling, in which the model samples only from the smallest set of most likely tokens whose cumulative probability reaches top_p.

response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[...],
    temperature=0.7,  # moderate randomness
    top_p=0.9,        # sample from the top 90% of probability mass
)
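
To build intuition for what these two parameters do, here is a toy illustration on a hand-made distribution. It follows the textbook definitions of temperature scaling and nucleus sampling, not OpenAI's internal implementation; the function names and the example numbers are made up for illustration.

```python
import math


def apply_temperature(logits, temperature):
    """Softmax over logits divided by the temperature (temperature must be > 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus(probs, top_p):
    """Indices of the smallest set of tokens whose cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return kept


logits = [2.0, 1.0, 0.0]
for t in (0.5, 1.0, 2.0):
    rounded = [round(p, 3) for p in apply_temperature(logits, t)]
    print(f"temperature={t}: {rounded}")

probs = [0.5, 0.3, 0.15, 0.05]
print("tokens kept at top_p=0.9:", nucleus(probs, 0.9))
```

Lower temperatures concentrate probability on the most likely token, while a smaller top_p drops the unlikely tail before sampling.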

Setting the Maximum Output Length

You can control the maximum length of the generated output by using the max_tokens parameter:

response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[...],
    max_tokens=100,  # stop generating after at most 100 output tokens
)
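
When the limit is reached, the output is cut off mid-thought and the choice's finish_reason is reported as "length" rather than "stop". The sketch below checks for that case; the helper name was_truncated is hypothetical, and the response is a hand-built stand-in so the example runs without calling the API.

```python
from types import SimpleNamespace


def was_truncated(response):
    """Return True if the first choice stopped because it hit the token limit."""
    return response.choices[0].finish_reason == "length"


# Stand-in object that mimics the shape of a chat completion response.
fake_response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            finish_reason="length",
            message=SimpleNamespace(content="The capital of France is"),
        )
    ]
)

if was_truncated(fake_response):
    print("Output was cut off; consider raising max_tokens.")
```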

Streaming Responses

For real-time applications, you can stream the model's responses as they are generated by setting the stream parameter to True:

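response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[...],
    stream=True,
)

for chunk in response:
    # Some chunks carry no text (for example, the final one), so skip those.
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="")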

This will print the generated text in real-time as it becomes available.
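
Applications often need the complete reply as well as the live output, for example to store it in the conversation history. The following sketch accumulates the streamed pieces while printing them; the helper name collect_stream is hypothetical, and the chunks are hand-built stand-ins shaped like streaming chunks so the example runs without calling the API.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Print streamed text as it arrives and return the full reply."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue
        piece = chunk.choices[0].delta.content
        if piece:  # content can be None, e.g. on the final chunk
            parts.append(piece)
            print(piece, end="", flush=True)
    print()
    return "".join(parts)


def fake_chunk(text):
    """Build a stand-in object shaped like one streaming chunk."""
    return SimpleNamespace(
        choices=[SimpleNamespace(delta=SimpleNamespace(content=text))]
    )


fake_stream = [fake_chunk("Hello"), fake_chunk(", "), fake_chunk("world"), fake_chunk(None)]
full_text = collect_stream(fake_stream)
```

With a real request, the iterable returned by a call made with stream=True would be passed in place of fake_stream.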


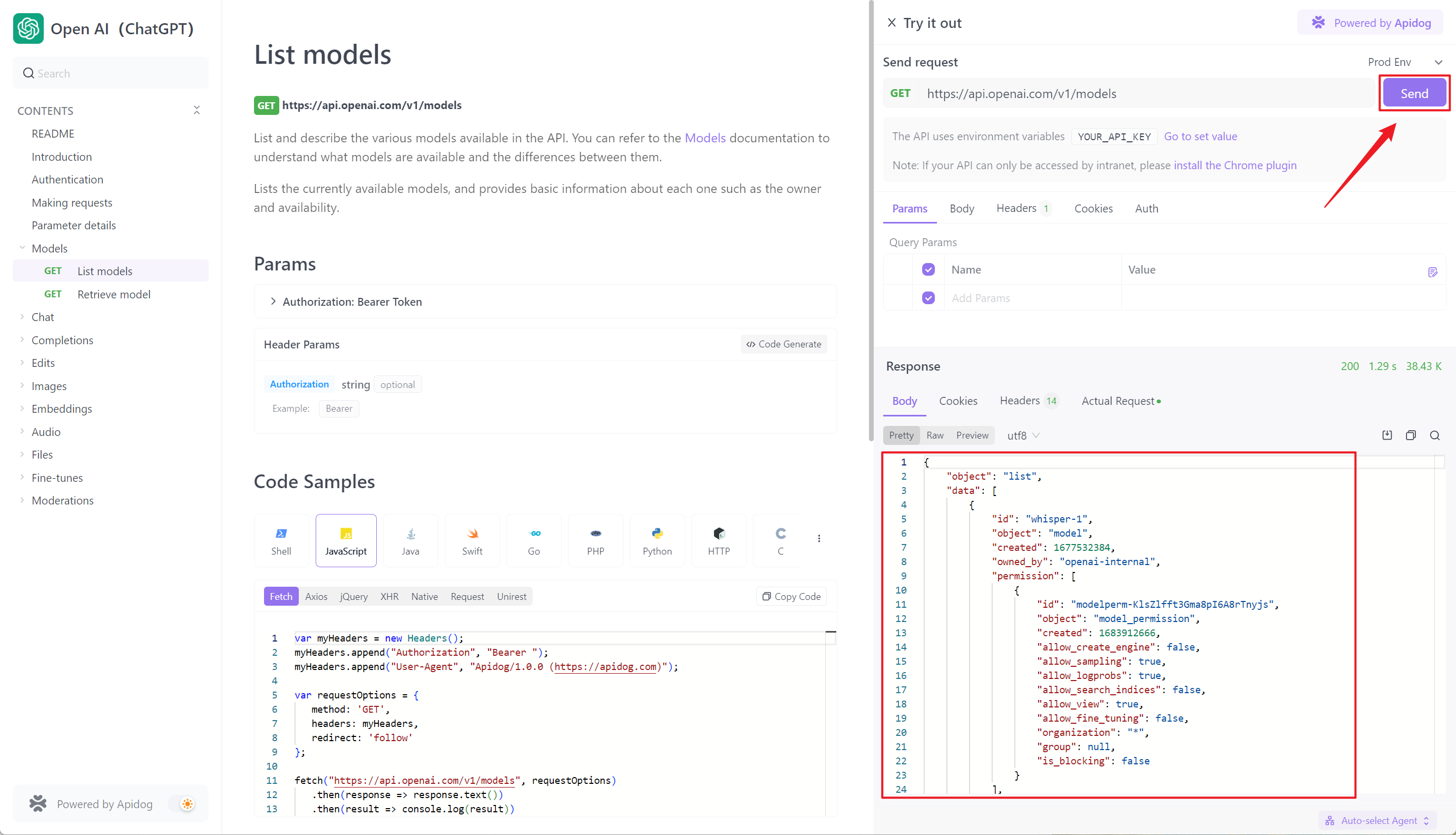

Testing GPT-4o API with APIDog

When working with the powerful GPT-4o API, it's crucial to ensure that your requests and responses are functioning as expected. APIDog is an excellent tool for testing and validating your interactions with the GPT-4o API. Here's how APIDog can help:

- Validate API requests: Use APIDog to create test cases that verify the correctness of your API requests to GPT-4o. Ensure that your requests include the necessary parameters, such as the model name, messages, and image data.

- Assert API responses: With APIDog, you can define assertions to check the structure and content of the responses received from the GPT-4o API. Verify that the generated text, images, or other media align with your expectations.

- Test edge cases: APIDog allows you to test various edge cases and scenarios, such as handling empty requests, invalid parameters, or rate limiting. Ensure that your application gracefully handles these situations when interacting with the GPT-4o API.

- Integrate with CI/CD: Incorporate APIDog into your continuous integration and continuous deployment (CI/CD) pipeline to automatically run API tests whenever changes are made to your codebase. This helps catch any regressions or issues early in the development process.

- Monitor API performance: Use APIDog to monitor the performance of your GPT-4o API requests. Track response times, error rates, and other metrics to ensure that your application is performing optimally when leveraging the power of GPT-4o.

By utilizing APIDog for testing your GPT-4o API integration, you can have confidence in the reliability and stability of your application. APIDog's intuitive interface and comprehensive testing capabilities make it an essential tool in your development workflow when working with cutting-edge AI technologies like GPT-4o.
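
On the application side, the rate-limiting edge case mentioned in the list above is commonly handled by retrying with exponential backoff. The sketch below is one generic way to do that, not an APIDog or OpenAI feature; the function name call_with_retries, its parameters, and the simulated failure are all hypothetical.

```python
import time


def call_with_retries(make_request, retry_on=(Exception,), max_attempts=5,
                      base_delay=1.0, sleep=time.sleep):
    """Call make_request(), retrying with exponential backoff on the given errors."""
    for attempt in range(max_attempts):
        try:
            return make_request()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...


# Simulated request that fails twice before succeeding, for illustration only.
attempts = {"count": 0}


def flaky_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError("simulated rate limit")
    return "ok"


# sleep is stubbed out here so the demo finishes instantly.
result = call_with_retries(flaky_request, retry_on=(TimeoutError,), sleep=lambda s: None)
print(result, "after", attempts["count"], "attempts")
```

With version 1.0 or later of the openai library, retry_on=(openai.RateLimitError,) would limit retries to rate-limit errors, and make_request would be a function that wraps the API call.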

Conclusion

GPT-4o represents a significant milestone in the field of artificial intelligence, offering unprecedented capabilities in multimodal reasoning and generation. By combining text, audio, and visual inputs, GPT-4o opens up new possibilities for more natural and engaging human-computer interactions.

In this article, we've explored the capabilities of GPT-4o, compared it with other GPT models, and provided a step-by-step guide on how to access and use it through the OpenAI API. We've covered making text-only requests, incorporating images into your requests, and the potential for handling audio and video inputs in the future.

As the field of AI continues to evolve, models like GPT-4o will play a crucial role in pushing the boundaries of what's possible and enabling new and innovative applications across various domains. Whether you're a developer, researcher, or simply curious about the latest advancements in AI, GPT-4o offers a glimpse into the future of human-computer interaction.

FAQ

Is GPT-4 free?

No, GPT-4 is not free to use. It is a powerful large language model developed by OpenAI that requires substantial computational resources to run. However, Anakin AI provides access to GPT-4 through their API, allowing developers and businesses to leverage this cutting-edge AI technology by paying for usage.

Will GPT-4 be free?

It is highly unlikely that GPT-4 will ever be completely free to use given the immense costs of training and running such a large AI model. OpenAI and companies like Anakin AI that provide access to GPT-4 need to recoup their investments and cover ongoing operational expenses. Free trials or limited free usage may be offered, but full unrestricted access will require a paid plan.

Is ChatGPT 4 free?

No, ChatGPT 4, which is based on the GPT-4 language model, is not free to use. While the previous ChatGPT was initially free during its research preview, ChatGPT 4 is a more advanced and expensive system. Anakin AI offers access to ChatGPT 4 through their API on a paid basis.

How do I access GPT-4?

To access GPT-4, you can sign up for Anakin AI's API services. This will provide you with the necessary keys and documentation to integrate GPT-4 into your applications and workflows. Anakin AI offers flexible pricing plans based on your expected usage and computational requirements for leveraging GPT-4's capabilities.