In the ever-evolving terrain of artificial intelligence, a groundbreaking development has just entered the scene. Meet Mamba: a state-of-the-art state space model architecture that promises to redefine the benchmarks for sequence modeling. This innovative model is not just a technological advancement; it's a paradigm shift, signaling a new era of efficiency and performance in machine learning.

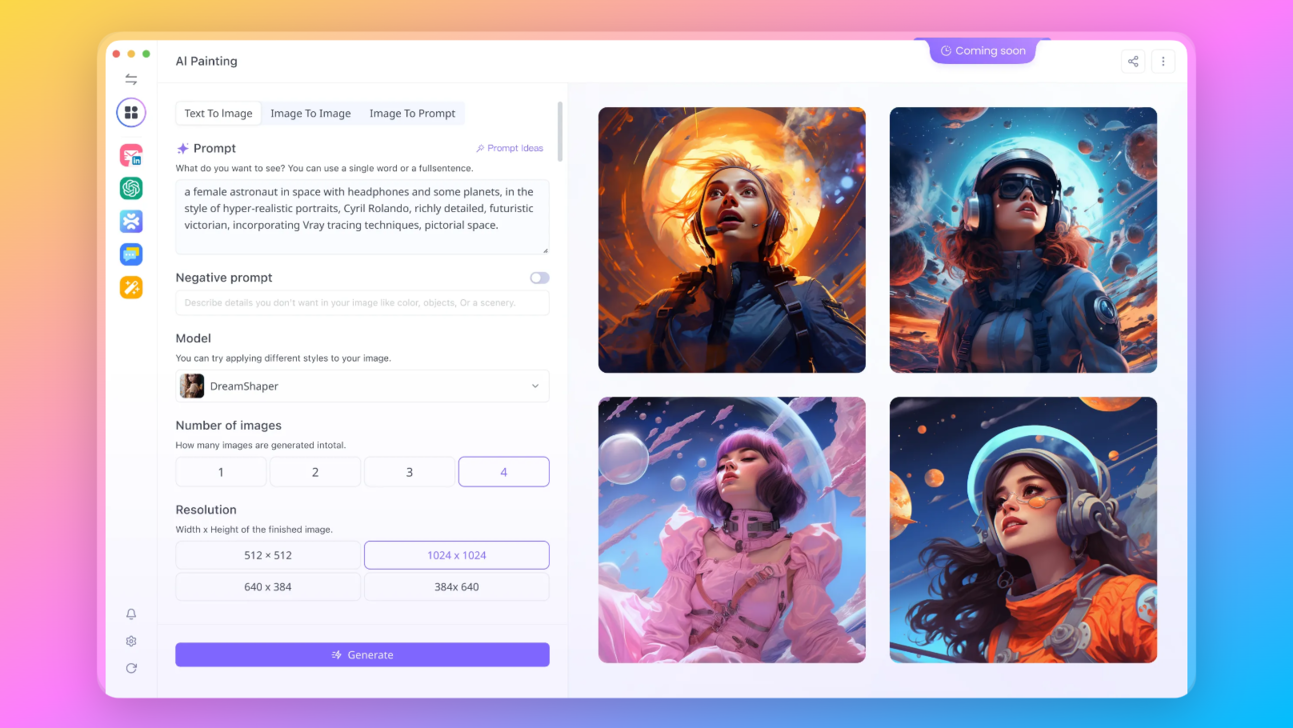

What is Mamba?

Mamba is a state space model (SSM) architecture developed by researchers Albert Gu and Tri Dao, designed to handle complex, information-dense data. It grew out of the need for a more efficient approach to sequence modeling, particularly in natural language processing, genomics, and audio analysis.

Quadratic attention has been indispensable for information-dense modalities such as language... until now.

Announcing Mamba: a new SSM arch. that has linear-time scaling, ultra long context, and most importantly--outperforms Transformers everywhere we've tried.

With @tri_dao 1/ pic.twitter.com/vXumZqJsdb

Albert Gu (@_albertgu), December 4, 2023

Unpacking the Significance of Mamba

So, what sets Mamba apart in the crowded space of machine learning models? It's the combination of rapid linear-time scaling and a selective SSM layer that enables it to outperform existing models, particularly Transformers, which have been the go-to choice for many AI applications until now. Mamba's architecture is designed with a hardware-aware mindset, optimizing it for the high-powered computational resources available today.

- Linear-Time Scaling: Mamba's compute cost grows linearly with sequence length, rather than quadratically as it does for the self-attention used in Transformers.

- Selective SSM Layer: At the core of Mamba is a selective state space layer that allows the model to selectively propagate or suppress information along the sequence, based on the input at each step.

- Hardware-Aware Design: Inspired by FlashAttention, Mamba's design takes into consideration the hardware it operates on, allowing for maximum efficiency.

By embracing the structured approach of state space models and refining it with a hardware-aware perspective, Mamba positions itself as a formidable tool in the arsenal of machine learning practitioners.
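To make the linear-versus-quadratic distinction concrete, here is a rough back-of-the-envelope sketch. It only counts pairwise token interactions for attention and per-step state updates for a recurrent scan; the function names and the state size of 16 are illustrative assumptions, and real costs depend on model width, kernels, and hardware.

```python
def attention_pairs(seq_len: int) -> int:
    """Token-to-token interactions in full self-attention: every token attends to every token."""
    return seq_len * seq_len


def scan_updates(seq_len: int, d_state: int = 16) -> int:
    """State updates in a recurrent scan: one update per state element per token.

    d_state=16 is an assumed, illustrative state size.
    """
    return seq_len * d_state


# Doubling the sequence length quadruples the attention count but only doubles the scan count.
for n in (1_000, 2_000, 4_000):
    print(n, attention_pairs(n), scan_updates(n))
```

The gap widens as sequences get longer, which is why the difference matters most for long-context workloads.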

The Technical Prowess of Mamba

To appreciate the engineering behind Mamba, it helps to look at its technical specifications. Mamba runs on Linux systems with an NVIDIA GPU and requires PyTorch 1.12+ and CUDA 11.6+. It installs with pip, which keeps it accessible to a wide range of users, from academic researchers to industry professionals.

You can learn more by reading the Mamba paper, "Mamba: Linear-Time Sequence Modeling with Selective State Spaces."

Installation and Requirements of Mamba

Before diving into the capabilities of Mamba, one must understand the prerequisites and installation steps to harness its full potential. Here's a brief rundown for the technically inclined:

- Operating System: Mamba demands a Linux environment to thrive.

- Hardware Requirements: An NVIDIA GPU is non-negotiable, as Mamba is designed to leverage parallel processing to its advantage.

- Software Dependencies: Compatibility with PyTorch 1.12+ and CUDA 11.6+ is a must for running Mamba efficiently.

Mamba's Innovative Architecture and Design

Mamba's architecture is a testament to the innovative strides in machine learning. It introduces a selective state space model (SSM) layer that fundamentally changes how models process sequences. But what does this mean in practice?

The Core of Mamba: Selective SSM Layer

The selective SSM layer is the heart of Mamba. Unlike traditional sequence models that treat all input data uniformly, the selective SSM layer allows Mamba to:

- Focus on Relevant Information: By weighing the importance of each input differently, Mamba can prioritize information that is more predictive for the task at hand.

- Adapt to Input Dynamically: The model parameters adjust in response to the input, giving Mamba the agility to handle a wide range of sequence modeling tasks effectively.

The result is a model that can process sequences with unprecedented efficiency, making it an ideal candidate for tasks that involve long sequences of data.
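The selection idea can be illustrated with a small, deliberately simplified sketch of the recurrence. It assumes a single input channel, a diagonal state matrix `A`, and the simplified discretization `A_bar = exp(delta * A)`, `B_bar = delta * B`. In Mamba the per-step values `delta`, `B`, and `C` are produced from the input by learned projections; here they are passed in directly so the effect is easy to see. This is a plain-Python illustration, not the fused GPU kernel.

```python
import math


def selective_scan(x, A, delta, B, C):
    """Run a single-channel selective SSM recurrence.

    x:     list of L input values
    A:     list of N (negative) diagonal state-matrix entries
    delta: list of L non-negative step sizes, one per time step
    B, C:  lists of L rows, each with N input/output weights for that step
    """
    h = [0.0] * len(A)  # hidden state, fixed size regardless of sequence length
    ys = []
    for t, x_t in enumerate(x):
        for n, a in enumerate(A):
            a_bar = math.exp(delta[t] * a)   # how much old state is kept
            b_bar = delta[t] * B[t][n]       # how much of the new input is written
            h[n] = a_bar * h[n] + b_bar * x_t
        ys.append(sum(c * s for c, s in zip(C[t], h)))
    return ys


# A step size of 0 at the second token leaves the state untouched,
# so the second input (5.0) is ignored entirely.
outputs = selective_scan(
    x=[1.0, 5.0],
    A=[-1.0],
    delta=[1.0, 0.0],
    B=[[1.0], [1.0]],
    C=[[1.0], [1.0]],
)
print(outputs)
```

Because the step size depends on the input, the model can choose per token whether to write it into the state or let it pass by, which is what "selective" refers to.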

Hardware-Aware Design: Optimized for Performance

Mamba's design philosophy is grounded in the understanding of modern hardware capabilities. It is engineered to fully utilize the computational resources of GPUs, ensuring:

- Optimized Memory Usage: Mamba materializes its expanded state only in fast on-chip SRAM rather than in the GPU's slower high-bandwidth memory (HBM), which reduces memory traffic and accelerates computation.

- Maximized Parallel Processing: By aligning its computations with the parallel nature of GPU processing, Mamba achieves a level of performance that sets a new standard for sequence models.

Installation and System Requirements for Mamba

To get started with Mamba, users need to navigate a few technical requirements. Here’s what you need to know:

Step-by-Step Installation Guide

- Ensure you have a Linux system with NVIDIA GPU support.

- Verify that PyTorch 1.12+ and CUDA 11.6+ are installed.

- Use the following commands to install Mamba and its necessary components:

pip install causal-conv1d

pip install mamba-ssm

Technical Requirements Breakdown

- Linux OS: A stable and compatible environment for running Mamba.

- NVIDIA GPU: For leveraging parallel computation capabilities.

- PyTorch 1.12+: The deep learning framework that Mamba is built on.

- CUDA 11.6+: NVIDIA's parallel computing platform, required for Mamba's GPU kernels.

By meeting these requirements, users can unlock the full potential of Mamba, embarking on a journey of high-performance sequence modeling.
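Before installing, it can help to confirm the environment meets the stated minimums. The following is a minimal sketch of such a check; the helper names `parse_version`, `meets_minimum`, and `check_environment` are made up for this example, and the check only reports what it finds rather than guaranteeing that the install will succeed.

```python
import platform
import re


def parse_version(text):
    """Return (major, minor) from strings such as '2.1.0+cu118' or '11.8'."""
    match = re.match(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(found, minimum):
    """Compare a version string against a (major, minor) minimum."""
    return parse_version(found) >= minimum


def check_environment():
    """Report whether each stated requirement appears to be satisfied."""
    report = {
        "linux": platform.system() == "Linux",
        "torch": False,
        "cuda": False,
        "gpu": False,
    }
    try:
        import torch
    except ImportError:
        return report  # PyTorch is not installed, so nothing else can be checked
    cuda_version = torch.version.cuda  # None on CPU-only builds
    report["torch"] = meets_minimum(torch.__version__, (1, 12))
    report["cuda"] = cuda_version is not None and meets_minimum(cuda_version, (11, 6))
    report["gpu"] = torch.cuda.is_available()
    return report


print(check_environment())
```

If any entry comes back `False`, that requirement is worth resolving before running the pip commands.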

Utilizing Mamba: A Guide to Usage and Implementation

Utilizing Mamba is a straightforward process, thanks to its well-documented codebase and user-friendly APIs. Whether you are implementing a Mamba block or integrating it into a complete language model, the process is designed to be as seamless as possible.

Implementing the Mamba Block

The Mamba block is the building block of the architecture, wrapping around the selective SSM. Here’s a glimpse at how to implement a Mamba block:

- Define model dimensions and instantiate the Mamba module.

- Pass your data through the model to obtain outputs.

Each step in the implementation is backed by a comprehensive understanding of sequence modeling needs, ensuring that whether you are working with language, audio, or any sequence data, Mamba adapts to your requirements.
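The two steps might look like the sketch below, which follows the usage pattern shown in the project's README. The helper names `mamba_kwargs` and `run_block` are illustrative and not part of the library, and the hyperparameter values are commonly used settings rather than requirements. Running `run_block` assumes `mamba-ssm` is installed and a CUDA GPU is available.

```python
def mamba_kwargs(d_model):
    """Constructor arguments for a single Mamba block (illustrative values)."""
    return {
        "d_model": d_model,  # model (embedding) dimension
        "d_state": 16,       # SSM state expansion factor
        "d_conv": 4,         # local convolution width
        "expand": 2,         # block expansion factor
    }


def run_block(batch=2, length=64, d_model=16):
    """Instantiate a Mamba block and pass random data through it."""
    # Imported lazily so the configuration helper works without a GPU setup.
    import torch
    from mamba_ssm import Mamba

    x = torch.randn(batch, length, d_model, device="cuda")
    block = Mamba(**mamba_kwargs(d_model)).to("cuda")
    y = block(x)
    return tuple(y.shape)
```

On a suitable machine, `run_block()` should return a shape equal to that of the input, since the block maps a `(batch, length, d_model)` tensor to a tensor of the same shape.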

Crafting a Language Model with Mamba

Mamba shines in language modeling, offering a complete example of a language model built upon its architecture. By stacking Mamba blocks and coupling them with a language model head, one can create powerful models capable of understanding and generating text.

- Repeating Mamba Blocks: To deepen the model's understanding.

- Language Model Head: For generating predictions.

Through detailed code examples and extensive documentation, users are guided through the process of model creation, ensuring that even those new to the field can harness Mamba's capabilities.

Pretrained Models and Performance Metrics

Accessing Mamba's power doesn't require starting from scratch, thanks to a suite of pretrained models available on HuggingFace. These models, ranging from 130M to 2.8B parameters, have been rigorously trained on the Pile dataset, ensuring a comprehensive grasp of linguistic patterns.

Insights into Mamba's Pretrained Models

- Diverse Range: With models spanning various sizes, Mamba caters to different computational needs and performance benchmarks.

- GPT-3 Model Dimensions: The pretrained models follow the standard model dimensions (depth and width) described for GPT-3, which makes comparisons with similarly sized Transformers more direct.
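Loading one of these checkpoints could look like the following sketch. It assumes the `MambaLMHeadModel.from_pretrained` interface and the GPT-NeoX tokenizer used in the project's example scripts, so the exact arguments should be checked against the installed version. The helper name `load_pretrained` is made up for this example, and calling it requires `mamba-ssm`, `transformers`, and a CUDA GPU.

```python
# Checkpoint names published under the state-spaces organization on HuggingFace.
PRETRAINED_CHECKPOINTS = [
    "state-spaces/mamba-130m",
    "state-spaces/mamba-370m",
    "state-spaces/mamba-790m",
    "state-spaces/mamba-1.4b",
    "state-spaces/mamba-2.8b",
]


def load_pretrained(name=PRETRAINED_CHECKPOINTS[0], device="cuda"):
    """Load a tokenizer and a pretrained Mamba language model (assumed interface)."""
    # Imported lazily so the checkpoint list is usable without these packages.
    import torch
    from transformers import AutoTokenizer
    from mamba_ssm.models.mixer_seq_simple import MambaLMHeadModel

    tokenizer = AutoTokenizer.from_pretrained("EleutherAI/gpt-neox-20b")
    model = MambaLMHeadModel.from_pretrained(name, device=device, dtype=torch.float16)
    return tokenizer, model
```

Starting with the smallest checkpoint is a reasonable way to verify the setup before downloading the larger ones.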

The real testament to Mamba's capabilities lies in its performance metrics. The model not only demonstrates improved efficiency in inference speed but also shows significant gains in accuracy across a spectrum of tasks.

Performance Expectations

- High Throughput: Mamba's inference process is notably faster, making it ideal for applications requiring rapid responses.

- Accuracy: In zero-shot evaluations, Mamba consistently showcases higher accuracy, indicating its robust understanding of the data.

Evaluating Mamba: Benchmarking and Zero-Shot Evaluations

Mamba's prowess is further substantiated through zero-shot evaluations. These evaluations measure a model's ability to apply knowledge to new tasks without prior training on those specific tasks.

Methodology for Zero-Shot Evaluations

- Utilize the lm-evaluation-harness library: This tool facilitates the evaluation process, allowing for a standardized assessment of Mamba's performance.

- Run a series of tasks: By testing Mamba on a variety of challenges, its versatility and robustness are thoroughly assessed.

Despite the inherent noise in the evaluation process, Mamba's results have been impressively consistent, underscoring its reliability.
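Conceptually, many zero-shot benchmarks are multiple-choice: the model scores each candidate answer and the highest-scoring one is taken as its prediction. The sketch below illustrates that idea only. It is not the lm-evaluation-harness API, and the `score` callable is a stand-in for a real model's log-likelihood function.

```python
def zero_shot_accuracy(examples, score):
    """Fraction of examples where the top-scoring choice is the correct one.

    examples: list of (context, choices, answer_index) tuples
    score:    callable(context, choice) -> float, higher means more likely
              (a placeholder for a model's log-likelihood)
    """
    correct = 0
    for context, choices, answer_index in examples:
        scores = [score(context, choice) for choice in choices]
        predicted = scores.index(max(scores))
        correct += predicted == answer_index
    return correct / len(examples)


# Toy data and a toy scorer, purely for illustration.
toy_examples = [
    ("2 + 2 =", ["3", "4"], 1),
    ("The sky is", ["blue", "green"], 0),
]


def toy_score(context, choice):
    """Hypothetical scorer that happens to prefer '4' and 'green'."""
    return 0.0 if choice in {"4", "green"} else -1.0


accuracy = zero_shot_accuracy(toy_examples, toy_score)
print(accuracy)
```

No task-specific training happens anywhere in this loop, which is what makes the evaluation zero-shot.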

Mamba in Practice: Inference and Real-World Applications

Mamba's real-world applications are as varied as they are impactful. From generating textual content to analyzing genomic sequences, the potential uses are vast.

High-Speed Inference with Mamba

- Rapid Prompt Completion: Mamba can swiftly generate completions for prompts, showcasing its quick inference capabilities.

- Batch Processing: The model excels in processing large batches of data, maintaining high accuracy and speed.

Mamba's practicality is not just theoretical. It is evidenced in its deployment in various domains, demonstrating its adaptability and efficiency.
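One reason recurrent-style inference can be fast is that the per-sequence state has a fixed size, whereas a Transformer's key-value cache grows with every generated token. The rough comparison below counts cached elements only. The layer count and width are illustrative assumptions, and the Mamba estimate assumes each layer keeps an SSM state of size `d_state` plus a convolution buffer of size `d_conv` per inner channel.

```python
def kv_cache_elements(n_layers, d_model, seq_len):
    """Cached keys and values for a Transformer: grows with sequence length."""
    return 2 * n_layers * d_model * seq_len


def mamba_state_elements(n_layers, d_model, d_state=16, d_conv=4, expand=2):
    """Cached state for a Mamba-style model: independent of sequence length."""
    d_inner = expand * d_model
    return n_layers * d_inner * (d_state + d_conv)


# Illustrative dimensions, not tied to any specific published model.
layers, width = 24, 768
for tokens in (1_000, 10_000, 100_000):
    print(tokens, kv_cache_elements(layers, width, tokens), mamba_state_elements(layers, width))
```

The second column keeps growing as the context lengthens, while the third stays constant.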

The Future of Mamba in AI

Mamba's introduction into the AI ecosystem has stirred excitement and speculation about its future impact. Its ability to handle lengthy sequences with ease and its high-performance benchmarks suggest that Mamba could play a pivotal role in the development of advanced AI systems.

Community Reactions and Expert Opinions

- Enthusiastic Reception: The AI community has welcomed Mamba with open arms, intrigued by its capabilities.

- Expert Validation: Specialists in the field have acknowledged Mamba's potential, predicting a shift in how sequence modeling is approached.

Taken together, these reactions suggest that Mamba is not just another model; it may set a new standard for what is possible in sequence modeling.

The Road Ahead for Mamba and AI

As the AI community peers into the horizon, Mamba stands out as a beacon of innovation, signaling new possibilities for tackling complex sequence modeling challenges. Its introduction is more than just a technical milestone; it's a shift towards more efficient, scalable, and intelligent systems that can understand and process sequences with unprecedented depth.

Embracing the Potential of Mamba

The potential applications of Mamba are as vast as the data sequences it can analyze. From real-time language translation to unraveling the mysteries within our DNA, Mamba's capabilities can be harnessed across various domains:

- Healthcare: Accelerating genomic data analysis could lead to breakthroughs in personalized medicine.

- Finance: Analyzing market trends over long periods to predict stock movements with greater accuracy.

- Customer Service: Powering chatbots that can maintain context over long conversations for improved user experiences.

Community Involvement and Collaborative Growth

The success of Mamba hinges not only on its technical prowess but also on the community's engagement. Open-source contributions, shared pretrained models, and collaborative research are key drivers that will determine Mamba's trajectory in the AI landscape.

- Open-Source Contributions: Encouraging developers and researchers to contribute to Mamba's codebase can lead to more robust and versatile models.

- Shared Resources: By sharing pretrained models, the community can benefit from collective knowledge and efforts, accelerating progress.

- Collaborative Research: Joint ventures between academia and industry can push the boundaries of what Mamba can achieve.

Mamba's Role in Advancing AI

Mamba is poised to play a significant role in the advancement of AI technologies. Its efficiency and performance set the stage for the development of more sophisticated models and applications, potentially leading to the next wave of AI breakthroughs.

- Foundation for Future Models: Mamba could serve as the underlying architecture for the next generation of state-of-the-art AI models.

- Enabling Longer Contexts: With its ability to handle long sequences, Mamba opens the door to more context-aware AI, resulting in more nuanced and intelligent systems.

Conclusion: Mamba as a Cornerstone of Modern AI

Mamba is not just an incremental update to existing sequence models; it is a redefinition of what's possible. Its arrival marks a new chapter in the annals of AI, where limitations of sequence length and computational inefficiency are becoming relics of the past.

In the span of a few years, we've witnessed the transition from RNNs to Transformers, and now to Mamba, each leap bringing us closer to AI that can think and process information with human-like depth. Mamba's linear-time scaling and selective state space approach embody the innovative spirit that drives the field of AI forward.

As researchers, developers, and enthusiasts rally around Mamba, it's clear that this is more than just a technological triumph—it's a collective step towards a future where AI can seamlessly integrate into every aspect of our digital lives, making the most complex sequence modeling tasks seem effortless.

In essence, Mamba is not the end of the journey; it's a promising beginning. It's a model built not just for the present but for the future—a future where AI's potential is boundless, and its impact is profound.
